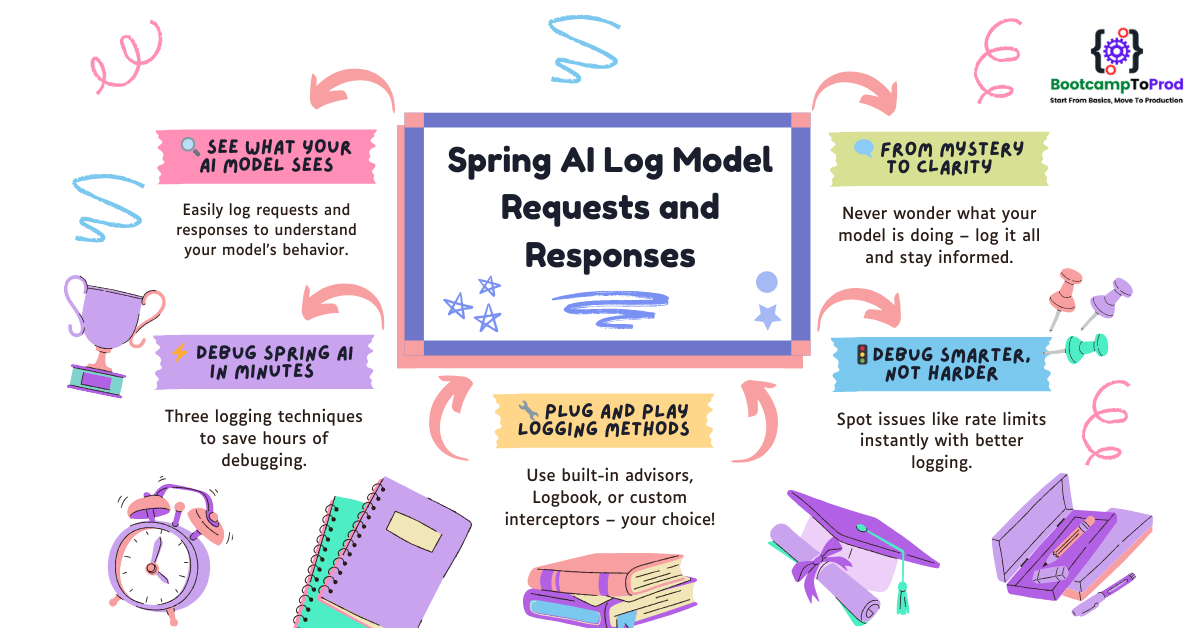

This guide to logging model requests and responses in Spring AI covers three simple ways to capture request and response data for better observability and debugging.

1. Introduction

Hey there! Today, I want to walk you through a little debugging adventure I went on while building a chat application with Spring Boot and AI models. If you’re just getting started with Spring AI, this guide will help you understand how to properly log your requests and responses when things go off track.

2. The Mystery of the Missing Response

Last week, I was experimenting with a basic chat app using Spring Boot and Spring AI. My goal was to connect it with OpenRouter and use Google’s Gemini model. Everything was going smoothly until… it wasn’t. Suddenly, my app stopped responding. No errors, no responses, just silence.

All I could see in the logs was this warning:

```
WARN 7080 --- [nio-8080-exec-9] o.s.ai.openai.OpenAiChatModel : No choices returned for prompt: Prompt{... model="google/gemini-2.0-flash-exp:free", ...}
```

No choices returned? What does that even mean?

After some frustrating hours, I finally discovered that I had hit the rate limit for the free tier of the model. The OpenRouter API was actually sending back a proper error, but my app wasn’t logging it clearly. This made me realize how crucial it is to log both the request being sent to the AI model and the response (especially errors!).

3. The Logging Journey Begins

While digging around for better ways to log requests and responses in Spring AI, I came across some really helpful resources:

- A GitHub discussion on logging AI requests and responses

- A GitHub issue comment with insights on advanced logging

- The official Spring AI documentation on logging support

Thanks to these, I discovered three different approaches to add detailed logging to my Spring AI application. Let’s go through each one using a simple demo setup!

4. Our Demo Application

We will build a simple Spring Boot chat application that integrates with Spring AI to interact with the Gemini model using OpenRouter.

Let’s start building it step by step!

Step 1: Add Spring AI Dependencies to Your Project

Set up a Spring Boot project with the necessary dependencies in pom.xml:

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-openai</artifactId>
    </dependency>
</dependencies>

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <!-- spring-ai.version must be defined in the <properties> section of pom.xml -->
            <version>${spring-ai.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>
```

These dependencies provide:

- `spring-boot-starter-web`: Basic web application functionality for creating REST endpoints.
- `spring-ai-starter-model-openai`: This starter makes it easy to integrate AI capabilities into your Spring Boot app. Although it’s named “OpenAI”, it also works with OpenAI-compatible providers such as OpenRouter, which lets us call Google Gemini’s free models through the same unified Spring AI APIs.
- `spring-ai-bom`: The `dependencyManagement` section imports Spring AI’s Bill of Materials (BOM) so that all Spring AI components use compatible versions.

Step 2: Configure Application Properties

Open the `application.yml` file and add the following configuration, replacing `<your-openrouter-api-key>` with your OpenRouter API key:

```yaml
spring:
  application:
    name: spring-boot-ai-logging-demo
  ai:
    openai:
      api-key: "<your-openrouter-api-key>"
      base-url: 'https://openrouter.ai/api'
      chat:
        options:
          model: google/gemini-2.0-flash-exp:free
```

- `spring.ai.openai.api-key: "<your-openrouter-api-key>"`
  - The API key used to authenticate with the OpenAI-compatible provider.
  - Required to authorize requests to the AI model.
- `spring.ai.openai.base-url: "https://openrouter.ai/api"`
  - Overrides the default OpenAI endpoint.
  - Routes all AI requests through OpenRouter, which acts as a proxy in front of many models.
- `spring.ai.openai.chat.options.model: google/gemini-2.0-flash-exp:free`
  - Specifies the AI model used for chat interactions.
  - Here, it’s set to Google’s Gemini 2.0 Flash (experimental free version) via OpenRouter.
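Notice that `base-url` stops at `/api`. The request logs later in this article show the calls landing on `https://openrouter.ai/api/v1/chat/completions`, so Spring AI appends the chat-completions path itself. The plain-Java sketch below only illustrates that URL composition; the `/v1/chat/completions` suffix is inferred from those logs, and `OpenRouterUrl.completionsUri` is a hypothetical helper, not a Spring AI API.

```java
import java.net.URI;

class OpenRouterUrl {
    // Assumption: the path Spring AI appends to base-url, as seen in this article's request logs.
    static final String COMPLETIONS_PATH = "/v1/chat/completions";

    // Hypothetical helper: joins base-url and the completions path without a double slash.
    static URI completionsUri(String baseUrl) {
        String trimmed = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return URI.create(trimmed + COMPLETIONS_PATH);
    }

    public static void main(String[] args) {
        System.out.println(completionsUri("https://openrouter.ai/api")); // prints https://openrouter.ai/api/v1/chat/completions
    }
}
```

By the same logic, setting `base-url` to `https://openrouter.ai/api/v1` would likely produce a doubled `/v1/v1/...` path, which is worth checking in your logs if requests fail with a 404.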

Step 3: Creating the ChatClient Configuration

To interact with Google Gemini via OpenRouter in a Spring Boot application, you need to configure a chat client using Spring AI. This client enables seamless communication with the AI model, allowing you to send prompts and receive responses dynamically.

Below is the configuration for a basic chat client:

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ChatClientConfig {

    // ChatClient.Builder is auto-configured by Spring AI for the configured chat model
    @Bean
    public ChatClient chatClient(ChatClient.Builder chatClientBuilder) {
        return chatClientBuilder.build();
    }
}
```

Explanation:

This configuration registers a `ChatClient` as a Spring bean. Spring AI auto-configures the `ChatClient.Builder`, which we use to build a client that the rest of the application can inject to send prompts to Google Gemini via OpenRouter and read the responses.

Step 4: Building a Controller

Let’s create a simple REST controller to interact with the configured Gemini model for basic chat:

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/chat")
public class ChatController {

    private final ChatClient chatClient;

    @Autowired
    public ChatController(ChatClient chatClient) {
        this.chatClient = chatClient;
    }

    @PostMapping("/ask")
    public String chat(@RequestBody String userInput) {
        // Use the raw request body as the user message and return the model's text reply
        return chatClient.prompt(userInput).call().content();
    }
}
```

Our controller:

- Exposes a `/chat/ask` endpoint that accepts POST requests
- Takes the user’s input as the request body
- Passes it to `chatClient.prompt(userInput)`, sends it to the model with `call()`, and extracts the reply text with `content()`
- Returns the model’s response as a string

When you send a POST request, the controller will forward your message to the Gemini API via OpenRouter and return the response.
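If you prefer to exercise the endpoint from code instead of Postman, a minimal sketch with the JDK’s `java.net.http.HttpClient` could look like this. It assumes the app runs on `localhost:8080` and sends the prompt as `text/plain`, matching the Postman request visible in the Logbook output later on; the `AskClient` name and its methods are illustrative only.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class AskClient {
    // Assumption: the demo app runs locally on port 8080.
    static final URI ASK_URI = URI.create("http://localhost:8080/chat/ask");

    // Builds a POST with a plain-text body, like the Postman request in the logs.
    static HttpRequest buildAskRequest(String userInput) {
        return HttpRequest.newBuilder(ASK_URI)
                .header("Content-Type", "text/plain")
                .POST(HttpRequest.BodyPublishers.ofString(userInput))
                .build();
    }

    // Sends the prompt to the running app and returns the model's reply text.
    static String ask(HttpClient client, String userInput) throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(buildAskRequest(userInput), HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) {
        // Only builds the request; call ask(...) once the Spring Boot app is running.
        HttpRequest request = buildAskRequest("hey how are you?");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

With the app running, `AskClient.ask(HttpClient.newHttpClient(), "hey how are you?")` returns whatever text the controller sends back.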

Now, let’s see how we can add logging to this application using three different approaches, from simplest to most advanced.

5. Approach 1: Quick and Easy Logging with SimpleLoggerAdvisor

The easiest way to start logging is using Spring AI’s built-in SimpleLoggerAdvisor. Think of it as adding a helpful assistant who writes down everything your application says to the AI model and everything the AI model says back.
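Conceptually, an advisor wraps the call to the model: it sees the request on the way in and the response on the way out. The plain-Java analogy below uses no Spring AI types at all (`LoggingDecorator` and the fake model are hypothetical); it only illustrates the decorator idea behind `SimpleLoggerAdvisor` with a `Function<String, String>`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

class LoggingDecorator {
    // Wraps any "model call" so the prompt and the reply are recorded around it,
    // similar in spirit to how SimpleLoggerAdvisor sits in the ChatClient call chain.
    static Function<String, String> withLogging(Function<String, String> model, List<String> log) {
        return prompt -> {
            log.add("request: " + prompt);
            String reply = model.apply(prompt);
            log.add("response: " + reply);
            return reply;
        };
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        // Hypothetical stand-in for the AI model.
        Function<String, String> fakeModel = prompt -> "echo: " + prompt;
        String reply = withLogging(fakeModel, log).apply("hey how are you?");
        System.out.println(reply);
        log.forEach(System.out::println);
    }
}
```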

Step 1: Update your controller

```java
// Also add: import org.springframework.ai.chat.client.advisor.SimpleLoggerAdvisor;
@PostMapping("/ask")
public String chat(@RequestBody String userInput) {
    return chatClient.prompt(userInput)
            .advisors(new SimpleLoggerAdvisor()) // Add this line
            .call().content();
}
```

What changed: We added `.advisors(new SimpleLoggerAdvisor())` to our `ChatClient` call. This registers the advisor for the request, so Spring AI logs the prompt and the response.

Step 2: Update your application.yml

```yaml
logging:
  level:
    org.springframework.ai.chat.client.advisor: DEBUG
```

What this does: Sets the logging level of the advisor package to DEBUG. `SimpleLoggerAdvisor` writes its entries at DEBUG level, so without this setting its output is suppressed.

Step 3: Verify the output

With this simple change, you’ll now see logs that show:

- The exact prompt you’re sending to the AI model

- The complete response coming back from the model

If I had done this from the start, I would have immediately seen:

```
2025-04-05T22:52:18.285+05:30 DEBUG 10625 --- [spring-boot-ai-logging-demo] [io-8080-exec-10] o.s.a.c.c.advisor.SimpleLoggerAdvisor : request: AdvisedRequest[chatModel=OpenAiChatModel [defaultOptions=OpenAiChatOptions: {"streamUsage":false,"model":"google/gemini-2.0-flash-exp:free","temperature":0.7}], userText=hey how are you?
, systemText=null, chatOptions=OpenAiChatOptions: {"streamUsage":false,"model":"google/gemini-2.0-flash-exp:free","temperature":0.7}, media=[], functionNames=[], functionCallbacks=[], messages=[], userParams={}, systemParams={}, advisors=[org.springframework.ai.chat.client.DefaultChatClient$DefaultChatClientRequestSpec$1@2b05981a, org.springframework.ai.chat.client.DefaultChatClient$DefaultChatClientRequestSpec$2@29665198, org.springframework.ai.chat.client.DefaultChatClient$DefaultChatClientRequestSpec$1@3c9a4f19, org.springframework.ai.chat.client.DefaultChatClient$DefaultChatClientRequestSpec$2@325da6fa, SimpleLoggerAdvisor], advisorParams={}, adviseContext={}, toolContext={}]
2025-04-05T22:52:21.432+05:30 WARN 10625 --- [spring-boot-ai-logging-demo] [io-8080-exec-10] o.s.ai.openai.OpenAiChatModel : No choices returned for prompt: Prompt{messages=[UserMessage{content='hey how are you?
', properties={messageType=USER}, messageType=USER}], modelOptions=OpenAiChatOptions: {"streamUsage":false,"model":"google/gemini-2.0-flash-exp:free","temperature":0.7}}
2025-04-05T22:52:21.453+05:30 DEBUG 10625 --- [spring-boot-ai-logging-demo] [io-8080-exec-10] o.s.a.c.c.advisor.SimpleLoggerAdvisor : response: {
  "result" : null,
  "results" : [ ],
  "metadata" : {
    "id" : "",
    "model" : "",
    "rateLimit" : {
      "tokensLimit" : 0,
      "tokensReset" : 0.0,
      "requestsLimit" : 0,
      "requestsRemaining" : 0,
      "requestsReset" : 0.0,
      "tokensRemaining" : 0
    },
    "usage" : {
      "nativeUsage" : { },
      "completionTokens" : 0,
      "promptTokens" : 0,
      "totalTokens" : 0,
      "generationTokens" : 0
    },
    "promptMetadata" : [ ],
    "empty" : true
  }
}
```

This would have saved me hours of debugging!

Pros of this approach:

- Super simple to implement – just 2 changes

- Built into Spring AI – no extra dependencies

- Shows both prompts and responses

Cons:

- Only shows the AI conversation, not the HTTP details: the empty response is visible, but the provider error that caused it is not

- Limited customization options

6. Approach 2: See the Full HTTP Story with Logbook

While the SimpleLoggerAdvisor is great for seeing the AI conversation, sometimes we need to see more details about the HTTP communication. This is where a library called Logbook comes in handy.

Step 1: Add the dependency

Add this to your pom.xml:

```xml
<dependency>
    <groupId>org.zalando</groupId>
    <artifactId>logbook-spring-boot-starter</artifactId>
    <version>3.11.0</version>
</dependency>
```

You can find the latest Logbook version on Maven Central.

Step 2: Update your configuration class

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.boot.web.client.RestClientCustomizer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.zalando.logbook.Logbook;
import org.zalando.logbook.spring.LogbookClientHttpRequestInterceptor;

@Configuration
public class ChatClientConfig {

    @Bean
    public ChatClient chatClient(ChatClient.Builder chatClientBuilder) {
        return chatClientBuilder.build();
    }

    // The Logbook bean is provided by logbook-spring-boot-starter
    @Bean
    public RestClientCustomizer restClientCustomizer(Logbook logbook) {
        return restClientBuilder -> restClientBuilder.requestInterceptor(new LogbookClientHttpRequestInterceptor(logbook));
    }
}
```

What this code does:

- Creates a `RestClientCustomizer` bean that adds Logbook’s interceptor to every `RestClient`
- The interceptor logs every outgoing HTTP request and its response
- This works because the OpenAI chat model behind `ChatClient` sends its non-streaming requests through a `RestClient` created from Spring Boot’s auto-configured `RestClient.Builder`, and that builder applies every `RestClientCustomizer` bean

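Next, enable Logbook’s logger in `application.yml`:

```yaml
logging:
  level:
    org.zalando.logbook.Logbook: TRACE
```

What this does: Sets Logbook’s logging level to TRACE. Logbook writes its entries at TRACE level, so this is what makes the HTTP details appear.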

Step 3: Verify the output

Now you’ll see much more detailed logs, including:

- Full HTTP request details (URL, method, headers)

- Complete request bodies

- HTTP response status codes

- Response headers

- Complete response bodies

With this approach, I would have seen something like:

```
2025-04-05T23:12:20.764+05:30 TRACE 10969 --- [spring-boot-ai-logging-demo] [nio-8080-exec-9] org.zalando.logbook.Logbook : {"origin":"remote","type":"request","correlation":"b287526a9d6c6d9d","protocol":"HTTP/1.1","remote":"0:0:0:0:0:0:0:1","method":"POST","uri":"http://localhost:8080/chat/ask","host":"localhost","path":"/chat/ask","scheme":"http","port":"8080","headers":{"accept":["*/*"],"accept-encoding":["gzip, deflate, br"],"cache-control":["no-cache"],"connection":["keep-alive"],"content-length":["16"],"content-type":["text/plain"],"host":["localhost:8080"],"postman-token":["b54de5c8-0760-4873-93da-cdd5ed29c108"],"user-agent":["PostmanRuntime/7.43.3"]},"body":"hey how are you?"}

2025-04-05T23:12:20.776+05:30 TRACE 10969 --- [spring-boot-ai-logging-demo] [nio-8080-exec-9] org.zalando.logbook.Logbook : {"origin":"local","type":"request","correlation":"b22ea937000ed462","protocol":"HTTP/1.1","remote":"localhost","method":"POST","uri":"https://openrouter.ai/api/v1/chat/completions","host":"openrouter.ai","path":"/api/v1/chat/completions","scheme":"https","port":null,"headers":{"Authorization":["XXX"],"Content-Length":["139"],"Content-Type":["application/json"]},"body":{"messages":[{"content":"hey how are you?","role":"user"}],"model":"google/gemini-2.0-flash-exp:free","stream":false,"temperature":0.7}}

2025-04-05T23:12:23.986+05:30 TRACE 10969 --- [spring-boot-ai-logging-demo] [nio-8080-exec-9] org.zalando.logbook.Logbook : {"origin":"remote","type":"response","correlation":"b22ea937000ed462","duration":309,"protocol":"HTTP/1.1","status":200,"headers":{":status":["200"],"access-control-allow-origin":["*"],"cf-ray":["92bad76bf88dff73-BOM"],"content-type":["application/json"],"date":["Sat, 05 Apr 2025 17:42:21 GMT"],"server":["cloudflare"],"x-clerk-auth-message":["Invalid JWT form. A JWT consists of three parts separated by dots. (reason=token-invalid, token-carrier=header)"],"x-clerk-auth-reason":["token-invalid"],"x-clerk-auth-status":["signed-out"]},"body":{"error":{"message":"Provider returned error","code":429,"metadata":{"raw":"{\n \"error\": {\n \"code\": 429,\n \"message\": \"You exceeded your current quota, please check your plan and billing details. For more information on this error, head to: https://ai.google.dev/gemini-api/docs/rate-limits.\",\n \"status\": \"RESOURCE_EXHAUSTED\",\n \"details\": [\n {\n \"@type\": \"type.googleapis.com/google.rpc.QuotaFailure\",\n \"violations\": [\n {\n \"quotaMetric\": \"generativelanguage.googleapis.com/generate_content_paid_tier_input_token_count\",\n \"quotaId\": \"GenerateContentPaidTierInputTokensPerModelPerMinute\",\n \"quotaDimensions\": {\n \"location\": \"global\",\n \"model\": \"gemini-2.0-pro-exp\"\n },\n \"quotaValue\": \"10000000\"\n }\n ]\n },\n {\n \"@type\": \"type.googleapis.com/google.rpc.Help\",\n \"links\": [\n {\n \"description\": \"Learn more about Gemini API quotas\",\n \"url\": \"https://ai.google.dev/gemini-api/docs/rate-limits\"\n }\n ]\n },\n {\n \"@type\": \"type.googleapis.com/google.rpc.RetryInfo\",\n \"retryDelay\": \"38s\"\n }\n ]\n }\n}\n","provider_name":"Google AI Studio"}},"user_id":"user_XX"}}

2025-04-05T23:12:23.990+05:30 WARN 10969 --- [spring-boot-ai-logging-demo] [nio-8080-exec-9] o.s.ai.openai.OpenAiChatModel : No choices returned for prompt: Prompt{messages=[UserMessage{content='hey how are you?', properties={messageType=USER}, messageType=USER}], modelOptions=OpenAiChatOptions: {"streamUsage":false,"model":"google/gemini-2.0-flash-exp:free","temperature":0.7}}

2025-04-05T23:12:24.005+05:30 TRACE 10969 --- [spring-boot-ai-logging-demo] [nio-8080-exec-9] org.zalando.logbook.Logbook : {"origin":"local","type":"response","correlation":"b287526a9d6c6d9d","duration":3241,"protocol":"HTTP/1.1","status":200,"headers":{"Connection":["keep-alive"],"Date":["Sat, 05 Apr 2025 17:42:24 GMT"],"Keep-Alive":["timeout=60"],"Transfer-Encoding":["chunked"]}}
```

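This clearly shows the real problem. OpenRouter answered with HTTP status 200, but the response body carries an error with code 429 and the message “You exceeded your current quota”, which `OpenAiChatModel` reported only as “No choices returned”.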
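To turn an error body like the one above into a one-line message, you can pull out its top-level `code` and `message` fields. The sketch below does this with regular expressions purely to stay dependency-free; `ProviderErrorExtractor` and its patterns are assumptions, and real code would be more robust parsing the JSON with Jackson, which Spring Boot already ships.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ProviderErrorExtractor {
    // Unescaped "error": { ... } object, as in OpenRouter's error responses.
    private static final Pattern ERROR = Pattern.compile("\"error\"\\s*:\\s*\\{");
    private static final Pattern MESSAGE = Pattern.compile("\"message\"\\s*:\\s*\"([^\"]*)\"");
    private static final Pattern CODE = Pattern.compile("\"code\"\\s*:\\s*(\\d+)");

    // Returns "<code>: <message>" when the body contains an error object, otherwise empty.
    static Optional<String> describeError(String body) {
        Matcher error = ERROR.matcher(body);
        if (!error.find()) {
            return Optional.empty();
        }
        // Search only after the "error" key so unrelated fields earlier in the body are ignored.
        String rest = body.substring(error.end());
        Matcher message = MESSAGE.matcher(rest);
        Matcher code = CODE.matcher(rest);
        String msg = message.find() ? message.group(1) : "unknown error";
        String c = code.find() ? code.group(1) : "?";
        return Optional.of(c + ": " + msg);
    }

    public static void main(String[] args) {
        String body = "{\"error\":{\"message\":\"Provider returned error\",\"code\":429},\"user_id\":\"user_XX\"}";
        System.out.println(describeError(body).orElse("no error")); // prints 429: Provider returned error
    }
}
```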

Pros of this approach:

- Shows complete HTTP communication

- Includes headers and status codes

- Very little custom code: a single `RestClientCustomizer` bean

- Works with any HTTP service, not just AI models

Cons:

- Requires adding an additional dependency

- Can be verbose with lots of unneeded information

- Limited customization of format

7. Approach 3: Complete Control with a Custom Interceptor

For those who want complete control over logging, we can create our own custom interceptor. This is like building your own specialized debugging tool.

Step 1: Create a custom interceptor class

```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.http.HttpHeaders;
import org.springframework.http.HttpRequest;
import org.springframework.http.HttpStatusCode;
import org.springframework.http.client.ClientHttpRequestExecution;
import org.springframework.http.client.ClientHttpRequestInterceptor;
import org.springframework.http.client.ClientHttpResponse;
import org.springframework.util.StreamUtils;

import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

public class AIRequestLogInterceptor implements ClientHttpRequestInterceptor {

    private static final Logger logger = LoggerFactory.getLogger("AI-Communication-Logger");

    @Override
    public ClientHttpResponse intercept(HttpRequest request, byte[] body,
                                        ClientHttpRequestExecution execution) throws IOException {
        // Log what we're sending to the AI service
        logAIRequest(request, body);

        // Make the actual call to the AI service
        ClientHttpResponse response = execution.execute(request, body);

        // Create a wrapper that lets us read the response twice
        BufferedResponseWrapper responseWrapper = new BufferedResponseWrapper(response);

        // Log what the AI service sent back
        logAIResponse(responseWrapper);

        return responseWrapper;
    }

    private void logAIRequest(HttpRequest request, byte[] body) {
        logger.info("➡️ AI REQUEST: {} {}", request.getMethod(), request.getURI());
        // Note: this includes the Authorization header; mask it outside local development
        logger.info("📋 HEADERS: {}", request.getHeaders());
        String bodyText = new String(body, StandardCharsets.UTF_8);
        logger.info("📤 BODY: {}", bodyText);
    }

    private void logAIResponse(ClientHttpResponse response) throws IOException {
        logger.info("⬅️ AI RESPONSE STATUS: {} {}", response.getStatusCode(), response.getStatusText());
        logger.info("📋 HEADERS: {}", response.getHeaders());
        // Safe to read: the wrapper returns a fresh stream over the buffered bytes
        String responseBody = new String(response.getBody().readAllBytes(), StandardCharsets.UTF_8);
        logger.info("📥 BODY: {}", responseBody.trim());
    }

    // This wrapper buffers the body once so it can be read twice (for logging and by Spring AI)
    private static class BufferedResponseWrapper implements ClientHttpResponse {

        private final ClientHttpResponse original;
        private final byte[] body;

        BufferedResponseWrapper(ClientHttpResponse response) throws IOException {
            this.original = response;
            this.body = StreamUtils.copyToByteArray(response.getBody());
        }

        @Override
        public InputStream getBody() {
            return new ByteArrayInputStream(body);
        }

        @Override
        public HttpStatusCode getStatusCode() throws IOException {
            return original.getStatusCode();
        }

        // getRawStatusCode() is deprecated for removal since Spring Framework 6.0 and has a
        // default implementation, so it is deliberately not overridden here.

        @Override
        public String getStatusText() throws IOException {
            return original.getStatusText();
        }

        @Override
        public HttpHeaders getHeaders() {
            return original.getHeaders();
        }

        @Override
        public void close() {
            original.close();
        }
    }
}
```

What this code does:

- Creates a custom class that implements Spring’s `ClientHttpRequestInterceptor`
- `intercept()` logs both the request and the response
- `logAIRequest()` formats and logs request details
- `logAIResponse()` formats and logs response details
- Uses emojis to make the logs easier to scan
- `BufferedResponseWrapper` lets us read the response body twice (once for logging, once for the application)
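Why is the wrapper needed at all? A response body is an `InputStream`, and a stream can be consumed only once: if the interceptor reads it for logging, Spring AI would then get an empty body. This JDK-only sketch shows the problem and the buffering fix that `BufferedResponseWrapper` applies; the `ReadOnceDemo` class and the `{"choices":[]}` body are made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class ReadOnceDemo {
    // Reads whatever is left in the stream into memory.
    static byte[] buffer(InputStream in) {
        try {
            return in.readAllBytes();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static String readAll(InputStream in) {
        return new String(buffer(in), StandardCharsets.UTF_8);
    }

    // Stand-in for a network response body.
    static InputStream fakeResponseBody() {
        return new ByteArrayInputStream("{\"choices\":[]}".getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Without buffering: logging consumes the stream, so the caller reads an empty body.
        InputStream unbuffered = fakeResponseBody();
        System.out.println("logged:      " + readAll(unbuffered));
        System.out.println("caller sees: '" + readAll(unbuffered) + "'");

        // With buffering: copy the bytes once, then hand out a fresh stream per reader.
        byte[] buffered = buffer(fakeResponseBody());
        System.out.println("logged:      " + readAll(new ByteArrayInputStream(buffered)));
        System.out.println("caller sees: " + readAll(new ByteArrayInputStream(buffered)));
    }
}
```

The trade-off is that the whole body is held in memory, which is fine for chat completions but worth keeping in mind for very large responses.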

Step 2: Update your configuration class

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.boot.web.client.RestClientCustomizer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ChatClientConfig {

    @Bean
    public ChatClient chatClient(ChatClient.Builder chatClientBuilder) {
        return chatClientBuilder.build();
    }

    // Registers our interceptor on every RestClient built from Spring Boot's RestClient.Builder
    @Bean
    public RestClientCustomizer aiLoggingRestClientCustomizer() {
        return restClientBuilder -> restClientBuilder.requestInterceptor(new AIRequestLogInterceptor());
    }
}
```

What this code does:

- Creates a `RestClientCustomizer` bean that adds `AIRequestLogInterceptor` to every `RestClient`
- The interceptor logs all HTTP requests and responses made through those clients
- This works because the OpenAI chat model behind `ChatClient` sends its non-streaming requests through a `RestClient` created from Spring Boot’s auto-configured `RestClient.Builder`, and that builder applies every `RestClientCustomizer` bean

Step 3: Verify the output

With this approach, you’ll see beautifully formatted logs like:

```
# Request Related Logs
2025-04-05T23:46:15.886+05:30 INFO 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] AI-Communication-Logger : ➡️ AI REQUEST: POST https://openrouter.ai/api/v1/chat/completions
2025-04-05T23:46:15.887+05:30 INFO 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] AI-Communication-Logger : 📋 HEADERS: [Authorization:"Bearer XXXXX", Content-Type:"application/json", Content-Length:"139"]
2025-04-05T23:46:15.888+05:30 INFO 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] AI-Communication-Logger : 📤 BODY: {"messages":[{"content":"hey how are you?","role":"user"}],"model":"google/gemini-2.0-flash-exp:free","stream":false,"temperature":0.7}

# Response Related Logs
2025-04-05T23:46:20.872+05:30 INFO 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] AI-Communication-Logger : ⬅️ AI RESPONSE STATUS: 200 OK OK
2025-04-05T23:46:20.876+05:30 INFO 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] AI-Communication-Logger : 📋 HEADERS: [:status:"200", access-control-allow-origin:"*", cf-ray:"92bb091cb8e83f33-BOM", content-type:"application/json", date:"Sat, 05 Apr 2025 18:16:16 GMT", server:"cloudflare", x-clerk-auth-message:"Invalid JWT form. A JWT consists of three parts separated by dots. (reason=token-invalid, token-carrier=header)", x-clerk-auth-reason:"token-invalid", x-clerk-auth-status:"signed-out"]
2025-04-05T23:46:20.877+05:30 INFO 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] AI-Communication-Logger : 📥 BODY: {"error":{"message":"Provider returned error","code":429,"metadata":{"raw":"{\n \"error\": {\n \"code\": 429,\n \"message\": \"You exceeded your current quota, please check your plan and billing details. For more information on this error, head to: https://ai.google.dev/gemini-api/docs/rate-limits.\",\n \"status\": \"RESOURCE_EXHAUSTED\",\n \"details\": [\n {\n \"@type\": \"type.googleapis.com/google.rpc.QuotaFailure\",\n \"violations\": [\n {\n \"quotaMetric\": \"generativelanguage.googleapis.com/generate_content_paid_tier_input_token_count\",\n \"quotaId\": \"GenerateContentPaidTierInputTokensPerModelPerMinute\",\n \"quotaDimensions\": {\n \"model\": \"gemini-2.0-pro-exp\",\n \"location\": \"global\"\n },\n \"quotaValue\": \"10000000\"\n }\n ]\n },\n {\n \"@type\": \"type.googleapis.com/google.rpc.Help\",\n \"links\": [\n {\n \"description\": \"Learn more about Gemini API quotas\",\n \"url\": \"https://ai.google.dev/gemini-api/docs/rate-limits\"\n }\n ]\n },\n {\n \"@type\": \"type.googleapis.com/google.rpc.RetryInfo\",\n \"retryDelay\": \"41s\"\n }\n ]\n }\n}\n","provider_name":"Google AI Studio"}},"user_id":"user_XX"}
2025-04-05T23:46:20.916+05:30 WARN 11664 --- [spring-boot-ai-logging-demo] [nio-8080-exec-2] o.s.ai.openai.OpenAiChatModel : No choices returned for prompt: Prompt{messages=[UserMessage{content='hey how are you?', properties={messageType=USER}, messageType=USER}], modelOptions=OpenAiChatOptions: {"streamUsage":false,"model":"google/gemini-2.0-flash-exp:free","temperature":0.7}}
```

Pros of this approach:

- Complete control over what and how you log

- Custom formatting for better readability

- Can add special handling for common errors

- Can be extended to add alerts or special behaviors

Cons:

- Requires writing more code

- Need to understand HTTP client interceptors

- Might need updates when Spring Boot versions change
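As one example of the “special handling for common errors” listed in the pros, the 429 bodies in the logs include a `RetryInfo` entry with a `retryDelay` such as `"38s"`. The sketch below extracts that hint as a `java.time.Duration` so a custom interceptor could log or act on it. It is an assumption-laden heuristic: `RetryDelayExtractor` is hypothetical, and the regex tolerates the backslash-escaped quotes because OpenRouter nests Google’s error as a JSON string in `metadata.raw`.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RetryDelayExtractor {
    // Matches retryDelay": "38s" whether or not the quotes are backslash-escaped.
    private static final Pattern RETRY_DELAY =
            Pattern.compile("retryDelay\\\\?\"\\s*:\\s*\\\\?\"(\\d+(?:\\.\\d+)?)s");

    // Returns the suggested wait time, if the body contains a retryDelay hint.
    static Optional<Duration> retryDelay(String body) {
        Matcher matcher = RETRY_DELAY.matcher(body);
        if (!matcher.find()) {
            return Optional.empty();
        }
        // Convert "38" or "1.5" seconds into a Duration with millisecond precision.
        long millis = Math.round(Double.parseDouble(matcher.group(1)) * 1000);
        return Optional.of(Duration.ofMillis(millis));
    }

    public static void main(String[] args) {
        String body = "{\"error\":{\"metadata\":{\"raw\":\"{\\\"retryDelay\\\": \\\"38s\\\"}\"}}}";
        System.out.println(retryDelay(body).map(Duration::toString).orElse("no retry hint")); // prints PT38S
    }
}
```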

8. Which Approach Should You Use?

After trying all three approaches, here’s my recommendation:

- 👶 Beginner or just want to see prompts/responses? Use SimpleLoggerAdvisor

- 🧰 Want detailed HTTP logs without writing code? Go with Logbook

- ⚙️ Need full customization or special logging behavior? Write a custom interceptor

All of them are great — it just depends on how much control you need.

9. Source Code

You can find the complete example implementations discussed in this blog on our GitHub:

👉 Spring AI Logging Demo: View on GitHub

This repository contains three different branches, each demonstrating a unique logging approach:

- `main`: Logging using a custom interceptor via `RestClientCustomizer`.
- `simple-logger-advisor-logging`: Logging using Spring AI’s built-in `SimpleLoggerAdvisor`.
- `logbook-logging`: Logging using Logbook, a third-party HTTP logger.

10. Things to Consider

When implementing logging for your AI applications, keep these points in mind:

- Log size: AI responses can be very large. Consider truncating them in logs.

- Sensitive information: Be careful not to log API keys or user-sensitive information.

- Performance: Extensive logging can impact performance.

- Log rotation: Set up proper log rotation so your logs don’t fill up your disk.

- Production vs Development: Consider having more detailed logs in development and fewer in production.
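The first two points are easy to act on inside a custom interceptor. Here is a small, dependency-free sketch of two helpers you could call from `logAIRequest()` and `logAIResponse()`: one truncates long bodies, the other masks the `Authorization` header value. The 2,000-character limit, the `LogSanitizer` name, and the mask format are assumptions, and headers are modeled as a plain `Map<String, List<String>>` rather than Spring’s `HttpHeaders`.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LogSanitizer {
    // Assumption: 2,000 characters is enough to see an error without flooding the logs.
    static final int MAX_BODY_CHARS = 2_000;

    // Cuts long bodies and records how many characters were dropped.
    static String truncate(String body, int maxChars) {
        if (body.length() <= maxChars) {
            return body;
        }
        return body.substring(0, maxChars) + "... [truncated " + (body.length() - maxChars) + " chars]";
    }

    // Returns a copy of the headers with Authorization values masked; other headers are unchanged.
    static Map<String, List<String>> maskSecrets(Map<String, List<String>> headers) {
        Map<String, List<String>> copy = new LinkedHashMap<>();
        headers.forEach((name, values) -> {
            if (name.equalsIgnoreCase("Authorization")) {
                copy.put(name, values.stream().map(LogSanitizer::maskValue).toList());
            } else {
                copy.put(name, values);
            }
        });
        return copy;
    }

    // Keeps the auth scheme: "Bearer sk-abc" becomes "Bearer ****"; a bare token becomes "****".
    private static String maskValue(String value) {
        int space = value.indexOf(' ');
        return space > 0 ? value.substring(0, space) + " ****" : "****";
    }

    public static void main(String[] args) {
        Map<String, List<String>> headers = new LinkedHashMap<>();
        headers.put("Authorization", List.of("Bearer sk-or-secret"));
        headers.put("Content-Type", List.of("application/json"));
        System.out.println(maskSecrets(headers));
        System.out.println(truncate("x".repeat(2_010), MAX_BODY_CHARS));
    }
}
```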

11. FAQs

Why is logging important for AI applications?

AI services can fail silently or return vague errors that your app might not catch clearly. With proper logging, you get full visibility into the actual requests and responses, making it easier to debug issues when things don’t work as expected.

Can I use these logging techniques with other AI providers?

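Mostly, yes. `SimpleLoggerAdvisor` works at the `ChatClient` level, so it applies to any chat model Spring AI supports. The Logbook and custom-interceptor approaches hook into Spring’s `RestClient`, so they only see traffic from providers whose Spring AI client uses a `RestClient` built from the auto-configured builder. The OpenAI client used here does so for regular calls; streaming calls go through `WebClient` instead and will not appear in these logs.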

What about logging in production environments?

For production, consider logging only errors or warnings, not every request and response.

Can I log AI responses conditionally, only on errors?

Yes! If you’re using a custom interceptor, you can check the response status or body and log only if an error occurs. This helps keep logs clean in production.
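A minimal sketch of that decision, assuming you pass in the status code and the buffered body text from `BufferedResponseWrapper`. Because OpenRouter answered the rate-limit failure in this article with HTTP 200 and an `error` object in the body, checking the status alone would miss it. The `ErrorOnlyLogging` class and its “body starts with an error key” rule are a deliberately simple, hypothetical heuristic.

```java
import java.util.regex.Pattern;

class ErrorOnlyLogging {
    // Heuristic: a JSON body whose first key is "error", as in the OpenRouter 429 responses above.
    private static final Pattern ERROR_BODY = Pattern.compile("(?s)\\s*\\{\\s*\"error\"\\s*:.*");

    // Log when the HTTP status signals failure, or when a 2xx response still carries an error object.
    static boolean shouldLog(int status, String body) {
        boolean httpError = status >= 400;
        boolean errorInBody = body != null && ERROR_BODY.matcher(body).matches();
        return httpError || errorInBody;
    }

    public static void main(String[] args) {
        System.out.println(shouldLog(200, "{\"error\":{\"message\":\"Provider returned error\",\"code\":429}}")); // prints true
        System.out.println(shouldLog(200, "{\"id\":\"gen-1\",\"choices\":[]}")); // prints false
    }
}
```

Inside `intercept()`, you would call something like `shouldLog(responseWrapper.getStatusCode().value(), bodyText)` and only then emit the log lines. The `gen-1` id above is made up.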

12. Conclusion

When working with AI models in Spring Boot, having proper logging in place makes a huge difference. It helps you clearly see what’s being sent to the AI and what’s coming back, just like listening to both sides of a conversation. This visibility is key when something breaks or doesn’t behave as expected. Instead of guessing what went wrong, you’ll have the full picture in your logs, making it much easier to debug and fix issues quickly. Adding clear and detailed logging early on can save you hours of frustration later and make your development experience with Spring AI much smoother.

13. Learn More

Interested in learning more?

Integrating Google Gemini with Spring AI: Free LLM Access for Your POC
