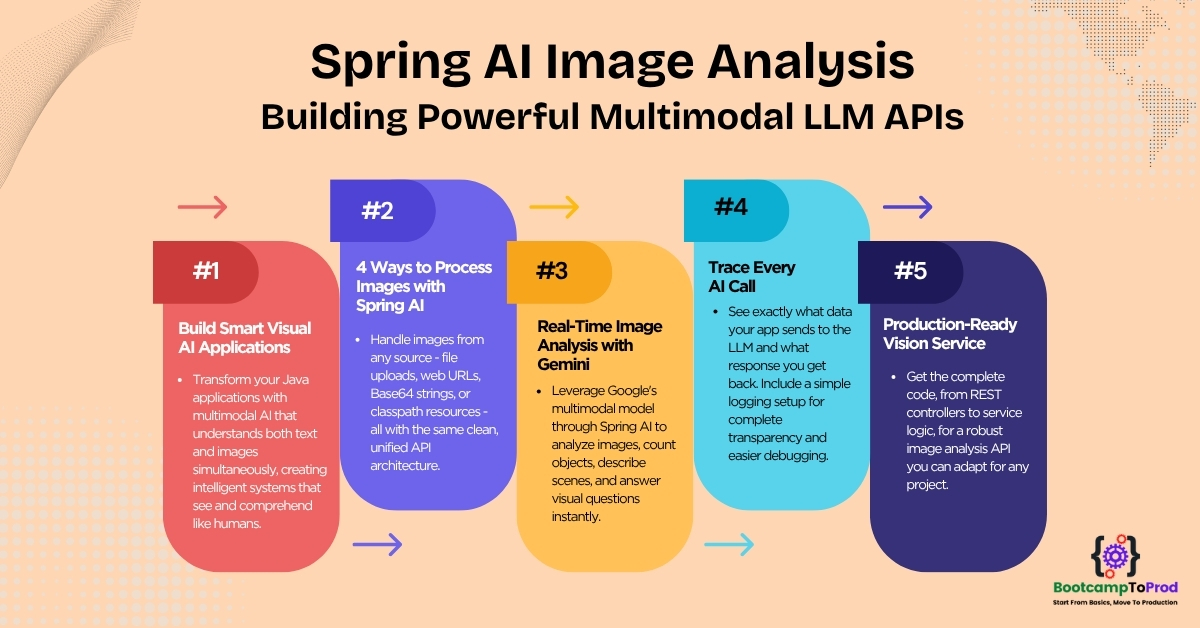

Discover the Spring AI Image Analysis cookbook for building a powerful, multimodal LLM‑driven image analysis API.

1. Introduction

Imagine building an application that can look at images and tell you what’s inside them, just like having a smart assistant that never gets tired of answering “What do you see in this picture?” That’s exactly what we’re going to build today with Spring AI: an image analysis service that accepts images from multiple sources and returns intelligent insights about them.

2. The Magic of Multimodality: The Foundation of Smart AI

Before diving into code, let’s understand what makes our image analysis possible: multimodality.

Think about how humans process information. When you look at a photo, you don’t just see colors and shapes – you understand context, recognize objects, and can describe what’s happening. You’re processing visual information alongside your existing knowledge (text-based understanding) simultaneously. This is multimodality in action.

Traditional machine learning approached this differently. We had separate models for different tasks – one model for speech recognition, another for image classification, and yet another for text processing. It was like having specialists who could only do one thing at a time.

Modern multimodal AI models, however, work more like the human brain. Models like OpenAI’s GPT, Google’s Gemini, and Anthropic’s Claude can accept text and images together in a single request (and, depending on the model, audio or video as well), producing responses that take all of those inputs into account.

3. How Our Multimodal Spring AI Image Analysis Works

So, how do we actually package our text and images together for the AI and get a response? The process is a clear request-response cycle, which Spring AI makes incredibly intuitive to implement.

- Input Collection: We accept both text prompts and images from various sources (files, URLs, Base64, or classpath resources).

- Prompt Building: We combine the user’s text query with the image media objects into a unified prompt.

- Spring AI Processing: The ChatClient handles the communication between our application and the multimodal LLM.

- AI Analysis: Gemini 2.0 Flash processes both text and images simultaneously to understand context and content.

- Intelligent Response: The AI returns meaningful insights that consider both the visual content and the user’s specific question.

This entire flow, from user input to final answer, is illustrated below:

The key insight here is that we’re not just sending images to the AI – we’re sending contextual requests. For example:

- Text: “Count the number of people in these images.”

- Images: [photo1.jpg, photo2.jpg]

- AI Response: “I can see 3 people in the first image and 2 people in the second image, totaling 5 people across both images.”

This is the power of multimodality – the AI understands both what you’re asking (text) and what you’re showing (images) together.

4. The Simplicity Behind the Magic: Core Implementation

Conceptually, achieving multimodal AI image analysis using Spring AI is remarkably clean and straightforward. The entire process boils down to three essential steps:

- Convert any image to a Spring AI Media object – regardless of source (file, URL, Base64, classpath)

- Create a user prompt combining text and media – Spring AI handles the complexity

- Call the LLM and extract the response – the multimodal model does the heavy lifting

Here’s the core code that makes it all happen:

```java
// Step 1: Wrap your image as a Spring AI Media object.
//
// The Media constructor used here expects two arguments:
//   1. A MimeType (e.g., MimeTypeUtils.IMAGE_PNG)
//   2. A Resource, which you can obtain from various sources:
//      - ClassPathResource for files in your resources folder
//      - UrlResource for images at external URLs
//      - multipartFile.getResource() for uploaded files
//      - ByteArrayResource after Base64 decoding, etc.
//
// Below is one example loading a PNG from the classpath:
Media imageMedia = new Media(MimeTypeUtils.IMAGE_PNG, new ClassPathResource("test.png"));

// Steps 2 & 3: Build the prompt, call the LLM, and get the response
return chatClient.prompt()
        .user(userSpec -> userSpec
                .text("Explain what you see in this picture.") // Text input
                .media(imageMedia))                           // Visual input
        .call()
        .content();
```

That’s it! This simple pattern works regardless of image source:

- Classpath: new Media(mimeType, new ClassPathResource("image.png"))

- File Upload: new Media(mimeType, multipartFile.getResource())

- URL: new Media(mimeType, new UrlResource("https://example.com/image.jpg"))

- Base64: new Media(mimeType, new ByteArrayResource(decodedBytes))

The beauty is that once you have a Media object, the rest of the code remains identical. Spring AI abstracts away all the complexity of multimodal communication, leaving you with clean, readable code that focuses on business logic rather than technical intricacies.

5. Real-World Application: The Universal Image Analyst API

We will build a Spring Boot application that exposes a REST API for image analysis. To make it a truly useful “cookbook” example, our API will be able to accept images in four different, common formats:

- From the Classpath: An image file stored directly within our application’s resources.

- File Uploads: One or more images sent as multipart/form-data, a standard for file uploads in web applications.

- From Web URLs: A list of public URLs pointing to images on the internet.

- Base64 Encoded Strings: Images encoded as text strings, often used in JSON payloads.

For any of these inputs, the user will also provide a text prompt (a question or instruction), and our service will return the AI’s analysis.

⚙️ Project Structure & Setup

Below is the folder structure of our Spring Boot application:

```
spring-ai-image-analysis-cookbook
├── src
│   └── main
│       ├── java
│       │   └── com
│       │       └── bootcamptoprod
│       │           ├── controller
│       │           │   └── ImageAnalysisController.java
│       │           ├── service
│       │           │   └── ImageAnalysisService.java
│       │           ├── dto
│       │           │   ├── UrlAnalysisRequest.java
│       │           │   ├── Base64Image.java
│       │           │   ├── Base64ImageAnalysisRequest.java
│       │           │   └── ImageAnalysisResponse.java
│       │           ├── exception
│       │           │   └── ImageProcessingException.java
│       │           └── SpringAIImageAnalysisCookbookApplication.java
│       └── resources
│           ├── application.yml
│           └── images
│               ├── Spring-AI-Chat-Client-Metrics.jpg
│               └── Spring-AI-Observability.jpg
└── pom.xml
```

Understanding the Project Structure

Here is a quick breakdown of the key files in our project and what each one does:

- SpringAIImageAnalysisCookbookApplication.java: The main entry point that bootstraps and starts the entire Spring Boot web application.

- ImageAnalysisController.java: Exposes the four REST API endpoints (/from-classpath, /from-files, /from-urls, /from-base64) to handle incoming image analysis requests.

- ImageAnalysisService.java: Contains the core business logic, converting various inputs into Media objects and using the ChatClient to communicate with the AI model.

- UrlAnalysisRequest.java, Base64Image.java, Base64ImageAnalysisRequest.java, ImageAnalysisResponse.java: Simple record classes (DTOs) that define the JSON structure for our API’s requests and responses.

- ImageProcessingException.java: A custom exception used for handling specific errors like invalid prompts or failed image downloads, resulting in clear HTTP 400 responses.

- application.yml: Configures the application, including the crucial connection details for the AI model provider (API key, model name, etc.).

- images/*.jpg: Example image files stored in the application’s resources, ready to be used by the /from-classpath endpoint.

- pom.xml: Declares all the necessary Maven dependencies for Spring Web, the Spring AI OpenAI starter, and other required libraries.

Let’s set up our project with the necessary dependencies and configurations.

Step 1: Add Maven Dependencies

Add the following dependencies to your pom.xml file.

```xml
<properties>
    <java.version>21</java.version>
    <spring-ai.version>1.0.0</spring-ai.version>
</properties>

<dependencies>
    <!-- Spring Boot Web for building RESTful web services -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>

    <!-- OpenAI model support; configurable for OpenAI-compatible providers (e.g. OpenAI, Google Gemini) -->
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-openai</artifactId>
    </dependency>

    <!-- For logging Spring AI HTTP calls -->
    <dependency>
        <groupId>org.zalando</groupId>
        <artifactId>logbook-spring-boot-starter</artifactId>
        <version>3.12.2</version>
    </dependency>
</dependencies>

<dependencyManagement>
    <dependencies>
        <!-- Spring AI bill of materials to align all spring-ai versions -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>${spring-ai.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>
```

In this configuration:

- spring-boot-starter-web: To create our RESTful API endpoints.

- spring-ai-starter-model-openai: The Spring AI starter for OpenAI-compatible models. We’ll use it with Google Gemini through Gemini’s OpenAI-compatible endpoint.

- logbook-spring-boot-starter: A handy library for logging HTTP requests and responses. It’s great for debugging.

- spring-ai-bom: This Spring AI Bill of Materials (BOM), declared in the dependencyManagement section, manages the versions of all Spring AI modules so they stay compatible and library version conflicts are avoided.

Step 2: Configure Application Properties

Next, we configure our application to connect to the AI model.

```yaml
spring:
  application:
    name: spring-ai-image-analysis-cookbook

  # AI configurations
  ai:
    openai:
      api-key: ${GEMINI_API_KEY}
      base-url: https://generativelanguage.googleapis.com/v1beta/openai
      chat:
        completions-path: /chat/completions
        options:
          model: gemini-2.0-flash-exp

logging:
  level:
    org.zalando.logbook.Logbook: TRACE
```

📄 Configuration Overview

- We’re using Google’s Gemini model through OpenAI-compatible API endpoints

- The API key is externalized using environment variables for security

- Logging is set to TRACE level to see HTTP traffic going to Google Gemini

Step 3: Application Entry Point

Now, let’s define the main class that boots our Spring Boot app.

```java
package com.bootcamptoprod;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.web.client.RestClientCustomizer;
import org.springframework.context.annotation.Bean;
import org.zalando.logbook.Logbook;
import org.zalando.logbook.spring.LogbookClientHttpRequestInterceptor;

@SpringBootApplication
public class SpringAIImageAnalysisCookbookApplication {

    public static void main(String[] args) {
        SpringApplication.run(SpringAIImageAnalysisCookbookApplication.class, args);
    }

    @Bean
    public RestClientCustomizer restClientCustomizer(Logbook logbook) {
        return restClientBuilder -> restClientBuilder.requestInterceptor(new LogbookClientHttpRequestInterceptor(logbook));
    }
}
```

Explanation:

- Main Class to Run the Application: SpringAIImageAnalysisCookbookApplication is the starting point of our application. When you run this class, Spring Boot initializes all components and starts the embedded server.

- HTTP Logging: The RestClientCustomizer bean adds Logbook’s logging interceptor to RestClient instances built from Spring Boot’s auto-configured RestClient.Builder, which Spring AI’s OpenAI integration uses. This lets us see the exact requests and responses exchanged with the AI model.

Step 4: Create Data Transfer Objects (DTOs)

Before diving into the service logic, let’s understand our data contracts. These simple Java record classes define the structure of the JSON data our API will send and receive.

```java
// dto/Base64Image.java
package com.bootcamptoprod.dto;

/**
 * Represents a single image encoded as a Base64 string, including its MIME type.
 */
public record Base64Image(
        String mimeType,
        String data // The Base64 encoded string
) {}

// dto/Base64ImageAnalysisRequest.java
package com.bootcamptoprod.dto;

import java.util.List;

/**
 * Defines the API request body for analyzing one or more Base64 encoded images.
 */
public record Base64ImageAnalysisRequest(
        List<Base64Image> images,
        String prompt
) {}

// dto/UrlAnalysisRequest.java
package com.bootcamptoprod.dto;

import java.util.List;

/**
 * Defines the API request body for analyzing images from URLs or a single classpath file.
 */
public record UrlAnalysisRequest(
        List<String> imageUrls,
        String prompt,
        String fileName
) {}

// dto/ImageAnalysisResponse.java
package com.bootcamptoprod.dto;

/**
 * Represents the final text response from the AI model, sent back to the client.
 */
public record ImageAnalysisResponse(
        String response
) {}
```

Explanation:

- Base64Image: Represents a single image provided as a Base64 encoded string along with its MIME type.

- Base64ImageAnalysisRequest: Defines the request payload for analyzing a list of Base64 encoded images with a single text prompt.

- UrlAnalysisRequest: Defines the request payload for analyzing images from a list of URLs or a single file from the classpath, along with a text prompt.

- ImageAnalysisResponse: A simple record that wraps the final text analysis received from the AI model for all API responses.
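Records can also normalize input at construction time. The sketch below is a hypothetical variant of Base64ImageAnalysisRequest (not the article’s version) whose compact constructor turns a missing images property into an empty, unmodifiable list and strips surrounding whitespace from the prompt, so the service never has to deal with a null list. Base64Image is redeclared only so the snippet compiles on its own.

```java
import java.util.List;

// Redeclared so this sketch compiles on its own; mirrors the article's DTO.
record Base64Image(String mimeType, String data) {}

// Hypothetical variant of the request DTO with input normalization.
record Base64ImageAnalysisRequest(List<Base64Image> images, String prompt) {

    // Compact constructor: runs before the record's fields are assigned.
    Base64ImageAnalysisRequest {
        // Treat a missing "images" property as an empty list. List.copyOf also
        // makes the list unmodifiable and rejects null elements with an NPE.
        images = (images == null) ? List.of() : List.copyOf(images);
        // Strip surrounding whitespace, but keep null so validation can reject it.
        prompt = (prompt == null) ? null : prompt.strip();
    }
}
```

Because Jackson deserializes records through their canonical constructor, this normalization would also apply to incoming JSON.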

Step 5: The Controller Layer: API Endpoints

Our controller exposes four endpoints, each handling different image input methods:

```java
package com.bootcamptoprod.controller;

import com.bootcamptoprod.dto.Base64ImageAnalysisRequest;
import com.bootcamptoprod.dto.ImageAnalysisResponse;
import com.bootcamptoprod.dto.UrlAnalysisRequest;
import com.bootcamptoprod.exception.ImageProcessingException;
import com.bootcamptoprod.service.ImageAnalysisService;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.ExceptionHandler;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.multipart.MultipartFile;

import java.util.List;

@RestController
@RequestMapping("/api/v1/image/analysis")
public class ImageAnalysisController {

    private final ImageAnalysisService imageAnalysisService;

    public ImageAnalysisController(ImageAnalysisService imageAnalysisService) {
        this.imageAnalysisService = imageAnalysisService;
    }

    /**
     * SCENARIO 1: Analyze a single file from the classpath (e.g., src/main/resources/images).
     */
    @PostMapping("/from-classpath")
    public ResponseEntity<ImageAnalysisResponse> analyzeFromClasspath(@RequestBody UrlAnalysisRequest request) {
        ImageAnalysisResponse imageAnalysisResponse = imageAnalysisService.analyzeImageFromClasspath(request.fileName(), request.prompt());
        return ResponseEntity.ok(imageAnalysisResponse);
    }

    /**
     * SCENARIO 2: Analyze multiple image files uploaded by the user.
     * This endpoint handles multipart/form-data requests.
     */
    @PostMapping("/from-files")
    public ResponseEntity<ImageAnalysisResponse> analyzeFromMultipart(@RequestParam("images") List<MultipartFile> images, @RequestParam("prompt") String prompt) {
        ImageAnalysisResponse imageAnalysisResponse = imageAnalysisService.analyzeImagesFromMultipart(images, prompt);
        return ResponseEntity.ok(imageAnalysisResponse);
    }

    /**
     * SCENARIO 3: Analyze multiple images from a list of URLs provided in a JSON body.
     */
    @PostMapping("/from-urls")
    public ResponseEntity<ImageAnalysisResponse> analyzeFromUrls(@RequestBody UrlAnalysisRequest request) {
        ImageAnalysisResponse imageAnalysisResponse = imageAnalysisService.analyzeImagesFromUrls(request.imageUrls(), request.prompt());
        return ResponseEntity.ok(imageAnalysisResponse);
    }

    /**
     * SCENARIO 4: Analyze multiple images from Base64-encoded strings in a JSON body.
     */
    @PostMapping("/from-base64")
    public ResponseEntity<ImageAnalysisResponse> analyzeFromBase64(@RequestBody Base64ImageAnalysisRequest request) {
        ImageAnalysisResponse imageAnalysisResponse = imageAnalysisService.analyzeImagesFromBase64(request.images(), request.prompt());
        return ResponseEntity.ok(imageAnalysisResponse);
    }

    /**
     * Centralized exception handler for this controller.
     * Catches our custom exception from the service layer and returns a clean
     * HTTP 400 Bad Request with the error message.
     */
    @ExceptionHandler(ImageProcessingException.class)
    public ResponseEntity<ImageAnalysisResponse> handleImageProcessingException(ImageProcessingException ex) {
        return ResponseEntity.badRequest().body(new ImageAnalysisResponse(ex.getMessage()));
    }
}
```

The ImageAnalysisController acts as the entry point for all incoming web requests. It defines the specific URLs (endpoints) for our four different image analysis scenarios and delegates the heavy lifting to the ImageAnalysisService.

Here is a breakdown of its responsibilities:

- /from-classpath: Accepts a JSON request to analyze a single image file located within the application’s resources folder.

- /from-files: Handles multipart/form-data requests, allowing users to upload one or more image files for analysis along with a prompt.

- /from-urls: Processes a JSON request containing a list of public image URLs, downloading and analyzing each one against the user’s prompt.

- /from-base64: Accepts a JSON payload with a list of Base64-encoded image strings, making it easy to send image data directly in the request body.

- @ExceptionHandler: Acts as a centralized error gateway, catching our custom ImageProcessingException and returning a user-friendly HTTP 400 Bad Request with a clear error message.
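Because /from-files accepts uploads, it helps to know that Spring Boot caps multipart requests by default (1MB per file and 10MB per request), so larger photos are rejected before they ever reach the controller. A sketch of raising those limits in application.yml; the values below are illustrative assumptions, not settings from the article:

```yaml
spring:
  servlet:
    multipart:
      # Maximum size of a single uploaded file (Spring Boot default: 1MB)
      max-file-size: 10MB
      # Maximum size of the whole multipart request (Spring Boot default: 10MB)
      max-request-size: 25MB
```

These keys belong under the existing spring: key in application.yml; a second top-level spring: key in the same YAML document would not be valid.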

Step 6: Custom Exception Handling

To handle predictable errors like invalid URLs or empty prompts gracefully, we use a dedicated custom exception.

```java
package com.bootcamptoprod.exception;

import org.springframework.http.HttpStatus;
import org.springframework.web.bind.annotation.ResponseStatus;

// Custom exception that will result in an HTTP 400 Bad Request response
@ResponseStatus(HttpStatus.BAD_REQUEST)
public class ImageProcessingException extends RuntimeException {

    public ImageProcessingException(String message) {
        super(message);
    }

    public ImageProcessingException(String message, Throwable cause) {
        super(message, cause);
    }
}
```

Explanation:

This simple class is a powerful tool for creating clean and predictable REST APIs.

- Here, we are creating an unchecked exception, which keeps our service layer code clean and focused on its primary logic.

- The key is the @ResponseStatus(HttpStatus.BAD_REQUEST) annotation from Spring. It tells the Spring Framework that whenever this exception is thrown and not caught by a more specific handler, it should stop processing and send an HTTP 400 Bad Request response to the client. This is the appropriate status code for user-input errors, such as an invalid image URL or an empty prompt.

- In our ImageAnalysisController, we also have a specific @ExceptionHandler for this type. This gives us the best of both worlds: @ResponseStatus provides a sensible default, while our handler lets us customize the exact JSON response body, ensuring the user gets a clear and helpful error message.

Step 7: The Heart of the Application: Service Implementation

Now let’s explore the core service that handles all the image analysis logic. This is where the multimodal AI magic happens, transforming images from various sources into intelligent insights.

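```java
package com.bootcamptoprod.service;

// Imports for the complete service, including the methods added in Steps 7.1 to 7.5.
import com.bootcamptoprod.dto.Base64Image;
import com.bootcamptoprod.dto.ImageAnalysisResponse;
import com.bootcamptoprod.exception.ImageProcessingException;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.content.Media; // Spring AI 1.0.x package for Media
import org.springframework.core.io.ByteArrayResource;
import org.springframework.core.io.ClassPathResource;
import org.springframework.core.io.Resource;
import org.springframework.core.io.UrlResource;
import org.springframework.stereotype.Service;
import org.springframework.util.MimeType;
import org.springframework.util.MimeTypeUtils;
import org.springframework.util.StringUtils;
import org.springframework.web.multipart.MultipartFile;

import java.net.URI;
import java.net.URL;
import java.net.URLConnection;
import java.util.Base64;
import java.util.List;
import java.util.stream.Collectors;

@Service
public class ImageAnalysisService {

    private static final Logger log = LoggerFactory.getLogger(ImageAnalysisService.class);

    // A single, reusable system prompt that defines the AI's persona and rules.
    private static final String SYSTEM_PROMPT_TEMPLATE = getSystemPrompt();

    // A constant to programmatically check if the AI followed our rules.
    private static final String AI_ERROR_RESPONSE = "Error: I can only analyze images and answer related questions.";

    private final ChatClient chatClient;

    // The ChatClient.Builder is injected by Spring, allowing us to build the client.
    public ImageAnalysisService(ChatClient.Builder chatClientBuilder) {
        this.chatClient = chatClientBuilder.build();
    }

    /**
     * System prompt that defines the AI's behavior and boundaries.
     */
    private static String getSystemPrompt() {
        return """
                You are an AI assistant that specializes in image analysis.
                Your task is to analyze the provided image(s) and answer the user's question about them.
                If the user's prompt is not related to analyzing the image(s),
                respond with the exact phrase: 'Error: I can only analyze images and answer related questions.'
                """;
    }
}
```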

Explanation:

- ChatClient: This is the central interface from Spring AI that we use to communicate with the Large Language Model (LLM). We inject the ChatClient.Builder in the constructor to create our client instance.

- SYSTEM_PROMPT_TEMPLATE: This is a powerful feature. A system prompt provides high-level instructions that guide the AI’s behavior for the entire conversation. Here, we are telling our AI two critical things:

  - Its job is to be an “image analysis specialist.”

  - If the user asks something unrelated to the images, it must reply with a specific error phrase. This acts as a “guardrail” to keep the AI on task.

- AI_ERROR_RESPONSE: We store the exact error phrase from our system prompt in a constant. This allows us to programmatically check the AI’s response and handle cases where the user’s prompt was out of scope.
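The public service methods in the following steps all begin with validatePrompt(prompt), a helper the article does not show. A minimal, hypothetical version might look like the sketch below. ImageProcessingException is redeclared so the snippet compiles on its own, and the 2,000-character cap is an arbitrary assumption rather than a rule from the article.

```java
// Stand-in for com.bootcamptoprod.exception.ImageProcessingException.
class ImageProcessingException extends RuntimeException {
    ImageProcessingException(String message) {
        super(message);
    }
}

final class PromptValidation {

    // Hypothetical limit; the article does not define one.
    static final int MAX_PROMPT_LENGTH = 2_000;

    private PromptValidation() {}

    // Rejects null, empty, whitespace-only, and overly long prompts.
    // The emptiness check mirrors Spring's !StringUtils.hasText(prompt).
    static void validatePrompt(String prompt) {
        if (prompt == null || prompt.isBlank()) {
            throw new ImageProcessingException("Prompt cannot be empty.");
        }
        if (prompt.length() > MAX_PROMPT_LENGTH) {
            throw new ImageProcessingException(
                    "Prompt must be at most " + MAX_PROMPT_LENGTH + " characters.");
        }
    }

    // Convenience predicate built on validatePrompt, handy in tests.
    static boolean isValid(String prompt) {
        try {
            validatePrompt(prompt);
            return true;
        } catch (ImageProcessingException e) {
            return false;
        }
    }
}
```

Throwing ImageProcessingException here means an empty prompt flows through the same @ExceptionHandler as every other input error and ends up as an HTTP 400.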

Step 7.1: Core Analysis Method: The Multimodal AI Communication Hub

All roads lead to this private method. No matter how the images are provided (file, URL, etc.), they are eventually passed to performAnalysis. This method is responsible for the actual multimodal communication with the AI.

```java
/**
 * This is the CORE method that communicates with the AI.
 * It is called by all the public service methods.
 */
private ImageAnalysisResponse performAnalysis(String prompt, List<Media> mediaList) {
    if (mediaList.isEmpty()) {
        throw new ImageProcessingException("No valid images were provided for analysis.");
    }

    // This is where the magic happens: combining text and media in one call.
    String response = chatClient.prompt()
            .system(SYSTEM_PROMPT_TEMPLATE)
            .user(userSpec -> userSpec
                    .text(prompt)                            // The user's text question
                    .media(mediaList.toArray(new Media[0]))) // The list of images
            .call()
            .content();

    // Check if the AI responded with our predefined error message.
    // strip() ignores leading/trailing whitespace such as a trailing newline.
    if (response != null && AI_ERROR_RESPONSE.equalsIgnoreCase(response.strip())) {
        throw new ImageProcessingException("The provided prompt is not related to image analysis.");
    }

    return new ImageAnalysisResponse(response);
}
```

Understanding the multimodal communication flow:

- System Instructions (.system(SYSTEM_PROMPT_TEMPLATE)): Defines the AI’s role and behavior boundaries.

- User Specification: Combines both text and visual inputs:

  - .text(prompt): The user’s question or instruction in natural language.

  - .media(mediaList.toArray(new Media[0])): The array of images, already converted to Spring AI Media objects.

- AI Processing: The model processes text and images together, understanding context across modalities.

- Response Validation: Checks whether the AI stayed within its defined scope.

- Structured Return: Wraps the response in our DTO for consistent API responses.

The beauty of this approach is that regardless of how the images arrived (file upload, URL, Base64, or classpath), they all get converted to the same Media object format, making the AI communication completely uniform.
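Comparing the reply against the exact refusal phrase is brittle: models sometimes wrap it in quotes or add surrounding whitespace or a newline. A hypothetical, more tolerant check (an assumption, not part of the article’s code) could normalize the reply before comparing:

```java
final class GuardrailCheck {

    // Same phrase the system prompt asks the model to return verbatim.
    static final String AI_ERROR_RESPONSE =
            "Error: I can only analyze images and answer related questions.";

    private GuardrailCheck() {}

    // Returns true if the reply is the refusal phrase, ignoring case,
    // surrounding whitespace, and one layer of matching single or double quotes.
    static boolean isOutOfScopeReply(String response) {
        if (response == null) {
            return false;
        }
        String normalized = response.strip();
        if (normalized.length() >= 2) {
            char first = normalized.charAt(0);
            char last = normalized.charAt(normalized.length() - 1);
            if ((first == '\'' || first == '"') && first == last) {
                normalized = normalized.substring(1, normalized.length() - 1).strip();
            }
        }
        return AI_ERROR_RESPONSE.equalsIgnoreCase(normalized);
    }
}
```

In performAnalysis, the condition would then read if (GuardrailCheck.isOutOfScopeReply(response)). The check still assumes the model reproduces the phrase's wording; a reply that paraphrases the refusal will pass through as a normal answer.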

Step 7.2: Scenario 1: Analyzing an Image from the Classpath (Resources Folder)

This scenario is ideal for analyzing images that are bundled with the application, such as for demos, tests, or default assets.

```java
public ImageAnalysisResponse analyzeImageFromClasspath(String fileName, String prompt) {
    validatePrompt(prompt);
    if (!StringUtils.hasText(fileName)) {
        throw new ImageProcessingException("File name cannot be empty.");
    }

    // Assumes images are in `src/main/resources/images/`
    Resource imageResource = new ClassPathResource("images/" + fileName);
    if (!imageResource.exists()) {
        throw new ImageProcessingException("File not found in classpath: images/" + fileName);
    }

    // We assume JPEG for this example, but you could determine this dynamically.
    Media imageMedia = new Media(MimeTypeUtils.IMAGE_JPEG, imageResource);

    // Call the core analysis method with a list containing our single image.
    return performAnalysis(prompt, List.of(imageMedia));
}
```

Key Details:

- Validate Input: It first ensures the prompt and fileName are not empty.

- Find Resource: It uses Spring’s ClassPathResource to locate the image file within the src/main/resources/images/ directory of your project.

- Check Existence: It calls .exists() to make sure the file was actually found, throwing an error if not.

- Create Media: It wraps the Resource in a Spring AI Media object, specifying its MIME type.

- Perform Analysis: It calls the central performAnalysis method, wrapping the single Media object in a List.
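The classpath method hard-codes MimeTypeUtils.IMAGE_JPEG, and its comment notes the type could be determined dynamically. A hedged, standard-library-only sketch that maps a file name’s extension to a MIME type string; the mapping table is an assumption that covers the formats the article’s determineMimeType handles, and the result could be passed to MimeType.valueOf(...).

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

final class ImageMimeTypes {

    // Extension -> MIME type; mirrors the formats handled by determineMimeType.
    private static final Map<String, String> BY_EXTENSION = Map.of(
            "jpg", "image/jpeg",
            "jpeg", "image/jpeg",
            "png", "image/png",
            "gif", "image/gif",
            "webp", "image/webp",
            "bmp", "image/bmp",
            "tif", "image/tiff",
            "tiff", "image/tiff");

    private ImageMimeTypes() {}

    // Returns the MIME type for a name such as "Spring-AI-Observability.jpg",
    // or Optional.empty() if the extension is missing or unknown.
    static Optional<String> fromFileName(String fileName) {
        if (fileName == null) {
            return Optional.empty();
        }
        int dot = fileName.lastIndexOf('.');
        if (dot < 0 || dot == fileName.length() - 1) {
            return Optional.empty();
        }
        String extension = fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        return Optional.ofNullable(BY_EXTENSION.get(extension));
    }
}
```

In a Spring application, MediaTypeFactory.getMediaType(resource) from spring-web offers a similar lookup backed by Spring’s bundled MIME mappings; an unknown extension could then be rejected with an ImageProcessingException instead of silently assuming JPEG.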

Step 7.3: Scenario 2: Analyzing Uploaded Image Files (Multipart)

This is the most common scenario for web applications, allowing users to upload images directly from their devices.

```java
public ImageAnalysisResponse analyzeImagesFromMultipart(List<MultipartFile> files, String prompt) {
    validatePrompt(prompt);
    if (files == null || files.isEmpty() || files.stream().allMatch(MultipartFile::isEmpty)) {
        throw new ImageProcessingException("Image files list cannot be empty.");
    }

    List<Media> mediaList = files.stream()
            .filter(file -> !file.isEmpty())
            .map(this::convertMultipartFileToMedia)
            .collect(Collectors.toList());

    return performAnalysis(prompt, mediaList);
}

/**
 * Logic for converting an uploaded MultipartFile into a Spring AI Media object.
 */
private Media convertMultipartFileToMedia(MultipartFile file) {
    // Determine the image's MIME type from the file upload data.
    String contentType = file.getContentType();
    MimeType mimeType = determineMimeType(contentType);

    // Create a new Media object using the detected MIME type and the file's resource.
    return new Media(mimeType, file.getResource());
}

/**
 * Helper method to determine MimeType from a content type string.
 */
private MimeType determineMimeType(String contentType) {
    if (contentType == null) {
        return MimeTypeUtils.IMAGE_PNG; // Default fallback if type is unknown
    }
    return switch (contentType.toLowerCase()) {
        case "image/jpeg", "image/jpg" -> MimeTypeUtils.IMAGE_JPEG;
        case "image/png" -> MimeTypeUtils.IMAGE_PNG;
        case "image/gif" -> MimeTypeUtils.IMAGE_GIF;
        case "image/webp" -> MimeType.valueOf("image/webp");
        case "image/bmp" -> MimeType.valueOf("image/bmp");
        case "image/tiff" -> MimeType.valueOf("image/tiff");
        default -> MimeTypeUtils.IMAGE_PNG; // Default fallback
    };
}
```

Key Details:

- Process List: The analyzeImagesFromMultipart method takes a list of MultipartFile objects and processes them with a Java Stream.

- Filter and Map: It first filters out any empty file parts and then uses .map() to call the convertMultipartFileToMedia helper for each valid file.

- Convert Helper: The convertMultipartFileToMedia method gets the content type (e.g., “image/png”) from the uploaded file.

- Determine MIME Type: It passes the content type string to determineMimeType, which uses a switch expression to return a proper MimeType object, falling back to PNG when the type is missing or unrecognized.

- Create Media: It then creates the Media object using the file’s Resource and the determined MimeType.

- Collect and Analyze: The stream collects all the created Media objects into a List, which is then sent to the central performAnalysis method.
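determineMimeType trusts the Content-Type sent by the client and silently falls back to PNG for anything it does not recognize. A hedged alternative (a different technique swapped in here, not the article’s approach) is to sniff the first bytes of the upload for well-known image signatures and reject files that match none. The signatures below are the standard ones for PNG, JPEG, GIF, and WebP; other formats are deliberately left out of this sketch.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Optional;

final class ImageSignatures {

    private static final byte[] PNG = {(byte) 0x89, 'P', 'N', 'G', '\r', '\n', 0x1A, '\n'};
    private static final byte[] JPEG = {(byte) 0xFF, (byte) 0xD8, (byte) 0xFF};

    private ImageSignatures() {}

    // Returns the MIME type implied by the file header, or empty if unrecognized.
    static Optional<String> sniff(byte[] header) {
        if (startsWith(header, 0, PNG)) {
            return Optional.of("image/png");
        }
        if (startsWith(header, 0, JPEG)) {
            return Optional.of("image/jpeg");
        }
        if (startsWith(header, 0, ascii("GIF87a")) || startsWith(header, 0, ascii("GIF89a"))) {
            return Optional.of("image/gif");
        }
        // WebP: "RIFF", then a 4-byte chunk size, then "WEBP"
        if (startsWith(header, 0, ascii("RIFF")) && startsWith(header, 8, ascii("WEBP"))) {
            return Optional.of("image/webp");
        }
        return Optional.empty();
    }

    private static byte[] ascii(String s) {
        return s.getBytes(StandardCharsets.US_ASCII);
    }

    // True if data contains prefix starting at the given offset.
    private static boolean startsWith(byte[] data, int offset, byte[] prefix) {
        if (data == null || data.length < offset + prefix.length) {
            return false;
        }
        return Arrays.equals(data, offset, offset + prefix.length, prefix, 0, prefix.length);
    }
}
```

Inside convertMultipartFileToMedia, the header could be read with file.getInputStream().readNBytes(12) (closing the stream afterwards), and an empty result turned into an ImageProcessingException rather than a PNG guess.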

Step 7.4: Scenario 3: Analyzing Images from Web URLs

This scenario is perfect for analyzing images that already exist on the internet without needing to upload them first.

```java
public ImageAnalysisResponse analyzeImagesFromUrls(List<String> urls, String prompt) {
    validatePrompt(prompt);
    if (urls == null || urls.isEmpty()) {
        throw new ImageProcessingException("Image URL list cannot be empty.");
    }

    List<Media> mediaList = urls.stream()
            .map(this::convertUrlToMedia)
            .collect(Collectors.toList());

    return performAnalysis(prompt, mediaList);
}

/**
 * Logic for downloading an image from a URL and converting it into a Media object.
 */
private Media convertUrlToMedia(String imageUrl) {
    try {
        log.info("Downloading image from URL: {}", imageUrl);
        // URI.create(...).toURL() replaces the URL(String) constructor, deprecated since Java 20.
        URLConnection connection = URI.create(imageUrl).toURL().openConnection();
        connection.setConnectTimeout(5000); // 5-second connection timeout
        connection.setReadTimeout(5000);    // 5-second read timeout
        String mimeType = connection.getContentType(); // Get MIME type from response headers
        if (mimeType == null || !mimeType.startsWith("image/")) {
            throw new ImageProcessingException("Invalid or non-image MIME type for URL: " + imageUrl);
        }
        // Note: UrlResource opens its own connection, which does not inherit the timeouts above.
        Resource imageResource = new UrlResource(imageUrl);
        return new Media(MimeType.valueOf(mimeType), imageResource);
    } catch (ImageProcessingException e) {
        throw e; // Keep the specific message instead of wrapping it below
    } catch (Exception e) {
        throw new ImageProcessingException("Failed to download or process image from URL: " + imageUrl, e);
    }
}
```

Key Details:

- Process URLs: The public method streams the list of URL strings and maps each one to the convertUrlToMedia helper.

- Establish Connection: The helper method opens a URLConnection to the image URL. Crucially, it sets connect and read timeouts so that a slow or unresponsive remote server cannot make the application hang indefinitely.

- Get MIME Type: It inspects the Content-Type header of the server’s HTTP response. This is a cheap way to check that the URL points to an image before the image bytes are read, although it relies on the server reporting the type correctly.

- Create Resource: It creates a UrlResource, a Spring Resource that represents data at a URL. Spring AI reads the image bytes through this resource, which opens its own connection to the URL, so the timeouts configured above apply only to the initial header check.

- Error Handling: The entire block is wrapped in a try-catch that converts failures (e.g., network errors or malformed URLs) into our user-friendly ImageProcessingException.
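convertUrlToMedia opens a connection to whatever string it receives. A small, hedged pre-check (an addition, not part of the article’s code) can reject malformed, relative, or non-HTTP(S) URLs, such as file: or ftp: links, before any network call is made:

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;

final class ImageUrlCheck {

    private ImageUrlCheck() {}

    // Returns true only for absolute http/https URLs that include a host.
    static boolean isAllowed(String imageUrl) {
        if (imageUrl == null || imageUrl.isBlank()) {
            return false;
        }
        try {
            URI uri = new URI(imageUrl.strip());
            String scheme = uri.getScheme();
            if (scheme == null) {
                return false; // Relative URL such as "images/cat.jpg"
            }
            String lower = scheme.toLowerCase(Locale.ROOT);
            return (lower.equals("http") || lower.equals("https")) && uri.getHost() != null;
        } catch (URISyntaxException e) {
            return false; // Malformed input, e.g. unencoded spaces
        }
    }
}
```

Calling this at the top of convertUrlToMedia and throwing ImageProcessingException when it returns false keeps the error a clean HTTP 400. On its own it does not block requests to internal or private addresses.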

Step 7.5: Scenario 4: Analyzing Base64 Encoded Images

This method is useful for APIs where the client sends image data directly inside a JSON payload, a common pattern for mobile or single-page applications.

```java
public ImageAnalysisResponse analyzeImagesFromBase64(List<Base64Image> base64Images, String prompt) {
    validatePrompt(prompt);
    if (base64Images == null || base64Images.isEmpty()) {
        throw new ImageProcessingException("Base64 image list cannot be empty.");
    }

    List<Media> mediaList = base64Images.stream()
            .map(this::convertBase64ToMedia)
            .collect(Collectors.toList());

    return performAnalysis(prompt, mediaList);
}

/**
 * Logic for decoding a Base64 string into a Media object.
 */
private Media convertBase64ToMedia(Base64Image base64Image) {
    if (!StringUtils.hasText(base64Image.mimeType()) || !StringUtils.hasText(base64Image.data())) {
        throw new ImageProcessingException("Base64 image data and MIME type cannot be empty.");
    }
    try {
        // Decode the Base64 string back into its original binary format.
        byte[] decodedBytes = Base64.getDecoder().decode(base64Image.data());
        // Wrap the byte array in a resource and create the Media object.
        return new Media(MimeType.valueOf(base64Image.mimeType()), new ByteArrayResource(decodedBytes));
    } catch (Exception e) {
        throw new ImageProcessingException("Invalid Base64 data provided.", e);
    }
}
```

Key Details:

- Process List: Similar to the other scenarios, this method streams the list of Base64Image objects and maps them to the convertBase64ToMedia helper.

- Decode Data: The helper’s primary job is to decode the Base64 text string back into its raw binary data (a byte[] array).

- Wrap in Resource: It then uses Spring’s ByteArrayResource, an efficient in-memory implementation of the Resource interface, to wrap this byte array.

- Create Media: It creates the Media object using the MIME type provided in the request and the ByteArrayResource.

- Error Handling: The decoding process is wrapped in a try-catch block to handle any malformed Base64 data gracefully.
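Browsers often produce Base64 images as data URLs (for example via FileReader.readAsDataURL), which prefix the payload with data:image/png;base64,. Base64.getDecoder() rejects that prefix, so such requests would fail with “Invalid Base64 data provided.” A hypothetical helper (not part of the article) that strips an optional data-URL prefix before decoding:

```java
import java.util.Base64;

final class Base64ImageDecoder {

    private Base64ImageDecoder() {}

    // Decodes plain Base64 or a data URL such as "data:image/png;base64,iVBOR...".
    // Throws IllegalArgumentException for malformed input, as Base64.Decoder does.
    static byte[] decode(String data) {
        if (data == null) {
            throw new IllegalArgumentException("Base64 data must not be null");
        }
        String payload = data.strip();
        if (payload.startsWith("data:")) {
            int comma = payload.indexOf(',');
            if (comma < 0 || !payload.substring(0, comma).endsWith(";base64")) {
                throw new IllegalArgumentException("Not a Base64 data URL");
            }
            payload = payload.substring(comma + 1);
        }
        // Base64.getMimeDecoder() would also tolerate line breaks (it ignores
        // characters outside the Base64 alphabet); the basic decoder rejects them.
        return Base64.getDecoder().decode(payload);
    }
}
```

Swapping Base64.getDecoder().decode(base64Image.data()) for Base64ImageDecoder.decode(base64Image.data()) would keep the existing try-catch behavior, since both throw IllegalArgumentException on bad input.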

6. Testing the Application

Once the application is started, you can test each of the four endpoints using a command-line tool like cURL, or you can also test using Postman. Here are example requests for each scenario.

1. Analyze an Image from the Classpath

This endpoint uses one of the images bundled inside the application’s src/main/resources/images folder.

```bash
curl -X POST http://localhost:8080/api/v1/image/analysis/from-classpath \
  -H "Content-Type: application/json" \
  -d '{
    "fileName": "Spring-AI-Observability.jpg",
    "prompt": "What do you understand from this image?"
  }'
```

2. Analyze an Uploaded Image

This endpoint accepts a multipart file upload, and you can attach several files by repeating the images form field. Replace /path/to/your/imageX.jpg with the actual paths to image files on your computer.
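Because the prompt for this endpoint travels in the query string, spaces and reserved characters such as ? need to be URL-encoded. If you call the endpoint from Java code rather than the terminal, a minimal sketch of building that URL with the JDK's URLEncoder could look like this (FilesEndpointUrl is a hypothetical name):

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: builds the /from-files URL with a safely encoded prompt.
public final class FilesEndpointUrl {

    private FilesEndpointUrl() {}

    public static String build(String baseUrl, String prompt) {
        // URLEncoder applies application/x-www-form-urlencoded rules:
        // spaces become '+', and reserved characters such as '?' become %XX escapes.
        String encoded = URLEncoder.encode(prompt, StandardCharsets.UTF_8);
        return baseUrl + "/api/v1/image/analysis/from-files?prompt=" + encoded;
    }

    public static void main(String[] args) {
        System.out.println(build("http://localhost:8080", "Is there any similarity in these images?"));
    }
}
```

URLEncoder produces form-style encoding (+ for spaces), which servlet containers typically decode back to spaces for request parameters; %20 works just as well if you encode the URL by hand.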

```bash
curl -X POST 'http://localhost:8080/api/v1/image/analysis/from-files?prompt=Is%20there%20any%20similarity%20in%20these%20images%3F' \
  --form 'images=@/path/to/your/image1.jpg' \
  --form 'images=@/path/to/your/image2.jpg'
```

3. Analyze an Image from a URL

You can use any publicly accessible image URL for this endpoint.

```bash
curl -X POST http://localhost:8080/api/v1/image/analysis/from-urls \
  -H "Content-Type: application/json" \
  -d '{
    "imageUrls": ["https://www.abc.com/some_valid_image_path.jpg"],
    "prompt": "What colors are used in this image?"
  }'
```

4. Analyze a Base64-Encoded Image

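For this request, you need to provide the image data as a Base64 text string. Replace the value of "data" with your own encoded image, without any data:image/...;base64, prefix, because the service decodes the value directly.

Tip: On macOS, you can generate a Base64 string and copy it to your clipboard with: base64 -i your_image.jpg | pbcopy. On Linux (GNU coreutils), use base64 -w 0 your_image.jpg instead, since the default output is wrapped at 76 characters and raw line breaks are not valid inside a JSON string.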
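If you would rather not paste a long string by hand, you could generate the complete request body in Java. This is a minimal sketch with a hypothetical class name (Base64RequestBody) and hand-rolled JSON escaping; a real client would more likely use a JSON library such as Jackson.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

// Hypothetical helper: builds the JSON body expected by the /from-base64 endpoint.
public final class Base64RequestBody {

    private Base64RequestBody() {}

    public static String build(Path image, String mimeType, String prompt) throws IOException {
        // The basic encoder emits a single line with no line breaks.
        String data = Base64.getEncoder().encodeToString(Files.readAllBytes(image));
        return "{\"images\":[{\"mimeType\":\"" + escape(mimeType) + "\",\"data\":\"" + data
                + "\"}],\"prompt\":\"" + escape(prompt) + "\"}";
    }

    // Minimal JSON string escaping for quotes, backslashes and control characters.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) throws IOException {
        // Usage: java Base64RequestBody.java /path/to/your_image.jpg > body.json
        System.out.println(build(Path.of(args[0]), "image/jpeg", "Extract text from this image"));
    }
}
```

The resulting file can then be sent with curl -H "Content-Type: application/json" --data @body.json followed by the endpoint URL.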

```bash
curl -X POST http://localhost:8080/api/v1/image/analysis/from-base64 \
  -H "Content-Type: application/json" \
  -d '{
    "images": [{
      "mimeType": "image/jpeg",
      "data": "/9j/4AAQSkZJRgABAQEAYABgAAD/2wBDAAIBAQIBAQICAgICAgICAwUDAwMDAwYEBAMFBwYHBwcGBwcICQsJCAgKCAcHCg0KCgsMDAwMBwkODw0MDgsMDAz/2wBDAQICAgMDAwYDAwYMCAcIDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAwMDAz/wAARCAABAPADASIAAhEBAxEB/8QAFQABAQAAAAAAAAAAAAAAAAAAAAj/xAAUEAEAAAAAAAAAAAAAAAAAAAAA/8QAFQEBAQAAAAAAAAAAAAAAAAAABgj/xAAUEAEAAAAAAAAAAAAAAAAAAAAA/9oADAMBAAIRAxEAPwCDAAn//2Q=="
    }],
    "prompt": "Extract text from this image"
  }'
```
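The same requests can also be sent from Java without any extra dependencies. As a minimal sketch (the class and method names are hypothetical), here is the classpath request built and sent with the JDK's java.net.http.HttpClient:

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical client for the /from-classpath endpoint using only the JDK.
public final class ClasspathAnalysisClient {

    private ClasspathAnalysisClient() {}

    public static HttpRequest buildRequest(String baseUrl, String fileName, String prompt) {
        // Assumes fileName and prompt contain no characters that need JSON escaping.
        String json = "{\"fileName\":\"" + fileName + "\",\"prompt\":\"" + prompt + "\"}";
        return HttpRequest.newBuilder(URI.create(baseUrl + "/api/v1/image/analysis/from-classpath"))
                .header("Content-Type", "application/json")
                // LLM calls can take several seconds, so allow a generous timeout.
                .timeout(Duration.ofSeconds(60))
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = buildRequest("http://localhost:8080",
                "Spring-AI-Observability.jpg", "What do you understand from this image?");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Keeping request construction separate from sending makes it easy to check the method, URI, and headers without a running server.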

7. Peeking Under the Hood – Logging HTTP Calls to the LLM

When developing AI-powered applications, it’s incredibly useful to see the exact requests being sent to the LLM and the responses coming back. This helps with debugging prompts and understanding the model’s behavior. In our project, we enabled this detailed logging using the Logbook library with three simple steps:

- We added the logbook-spring-boot-starter dependency to our pom.xml.
- We configured a RestClientCustomizer bean in our main application class to integrate Logbook with Spring's HTTP client.
- We set the logging level for Logbook to TRACE in our application.yml.

With this setup, every call made by the ChatClient is automatically logged to the console.
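For reference, the RestClientCustomizer step from the list above can be sketched as follows. This is a configuration fragment rather than a standalone program, and it assumes Spring Boot 3.x with logbook-spring-boot-starter on the classpath (so a Logbook bean is auto-configured); the class and bean names are hypothetical. The article's own version registers the bean in the main application class, which works the same way. The matching logging property is logging.level.org.zalando.logbook.Logbook: TRACE, which is the logger name visible in the sample logs.

```java
import org.springframework.boot.web.client.RestClientCustomizer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.zalando.logbook.Logbook;
import org.zalando.logbook.spring.LogbookClientHttpRequestInterceptor;

// Sketch only: registers Logbook as an interceptor on every RestClient built from
// Spring Boot's auto-configured RestClient.Builder.
@Configuration
public class LogbookRestClientConfig {

    @Bean
    public RestClientCustomizer logbookRestClientCustomizer(Logbook logbook) {
        // Requests and responses passing through this RestClient are handed to Logbook,
        // which writes them to the org.zalando.logbook.Logbook logger.
        return builder -> builder.requestInterceptor(new LogbookClientHttpRequestInterceptor(logbook));
    }
}
```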

For a more in-depth guide on this topic, you can read our detailed article: Spring AI Log Model Requests and Responses – 3 Easy Ways

👉 Sample Request Log

Here is an example of the request log printed to the console. You can clearly see our system prompt, the user’s text prompt, and the image data (shortened for brevity) being sent to the model.

```
2025-07-20T16:35:35.780+05:30 TRACE 1297 --- [nio-8080-exec-1] org.zalando.logbook.Logbook : {
  "origin": "local",
  "type": "request",
  "correlation": "a51234f3ce9934dd9b",
  "method": "POST",
  "uri": "https://generativelanguage.googleapis.com/v1beta/openai/chat/completions",
  "body": {
    "messages": [
      {
        "content": "You are an AI assistant that specializes in image analysis...",
        "role": "system"
      },
      {
        "content": [
          { "type": "text", "text": "What do you understand from this image?" },
          {
            "type": "image_url",
            "image_url": {
              "url": "data:image/jpeg;base64,/9j/4QC8RXhpZgAASUkqAAgA........."
            }
          }
        ],
        "role": "user"
      }
    ],
    "model": "gemini-2.0-flash-exp"
  }
}
```

👉 Sample Response Log

Here is the corresponding response from the LLM. It shows a successful status of 200, the model's analysis (truncated here), and the token usage, all of which are invaluable for troubleshooting.

```
2025-07-20T16:35:40.746+05:30 TRACE 1297 --- [nio-8080-exec-1] org.zalando.logbook.Logbook : {
  "origin": "remote",
  "type": "response",
  "correlation": "a51234f3ce9934dd9b",
  "duration": 4982,
  "status": 200,
  "body": {
    "choices": [
      {
        "finish_reason": "stop",
        "message": {
          "content": "The image is an advertisement for \"Spring AI Observability,\" which appears to be a tool or feature that provides insights into chat client metrics and prompt logging...",
          "role": "assistant"
        }
      }
    ],
    "usage": {"completion_tokens": 198, "prompt_tokens": 1885, "total_tokens": 2083}
  }
}
```

8. Video Tutorial

If you prefer visual learning, check out our step-by-step video tutorial. It walks you through this versatile Image Analysis API from scratch, demonstrating how to handle different image sources and interact with a multimodal LLM using Spring AI.

📺 Watch on YouTube:

9. Source Code

You can find the complete, working code for this project on our GitHub. Feel free to clone the repository, add your own API key, and run it on your machine. It’s a great way to experiment with the code and see how everything works together.

🔗 Spring AI Image Analysis Cookbook: https://github.com/BootcampToProd/spring-ai-image-analysis-cookbook

10. Things to Consider

When implementing this solution in production, keep these important points in mind:

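- Cost: Every LLM API call is billed, typically per token, and an image can account for a large share of the prompt (the sample request in section 7 consumed 1,885 prompt tokens). Monitor your usage and consider implementing rate limiting or quotas.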

- Security: Be cautious when processing images from public URLs. Validate URLs to prevent server-side request forgery (SSRF) attacks. Sanitize all user inputs. Also, validate file sizes and types to prevent malicious uploads.

- Error Handling: Our example has basic error handling. A production app would need more robust logging and potentially a retry mechanism for transient network errors.

- Performance: Downloading images from URLs or decoding large Base64 strings can be time-consuming. For high-throughput applications, consider processing requests asynchronously in a separate thread pool or message queue.

- Model Selection: We used a specific Gemini model. Different models have different capabilities, costs, and rate limits. Spring AI makes it easy to swap models by changing your pom.xml and application.yaml, so you can experiment to find the best fit.

- Memory Usage Monitoring: Track memory usage when handling large images to avoid OutOfMemoryError failures.

- Usage Alerts: Monitor and alert on AI API usage to prevent budget overruns.

- Image Resizing Strategies: Resize or compress images before analysis to cut per‑call costs.
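To make the SSRF point concrete, here is a minimal sketch of a URL guard that could run before the service downloads an image. ImageUrlValidator is a hypothetical class, not part of the cookbook project. It only allows http and https and rejects hosts that resolve to loopback, wildcard, link-local (including the 169.254.169.254 cloud metadata address), site-local, or multicast addresses.

```java
import java.net.InetAddress;
import java.net.URI;
import java.net.UnknownHostException;
import java.util.Locale;

// Hypothetical guard (not part of the cookbook project): rejects image URLs
// that point at internal addresses before the server downloads them.
public final class ImageUrlValidator {

    private ImageUrlValidator() {}

    public static void validate(String url) {
        URI uri;
        try {
            uri = URI.create(url);
        } catch (IllegalArgumentException e) {
            throw new IllegalArgumentException("Malformed URL: " + url, e);
        }
        String scheme = uri.getScheme() == null ? "" : uri.getScheme().toLowerCase(Locale.ROOT);
        if (!scheme.equals("http") && !scheme.equals("https")) {
            throw new IllegalArgumentException("Only http and https URLs are allowed.");
        }
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("URL has no host.");
        }
        try {
            // Check every address the host resolves to, not just the first one.
            for (InetAddress address : InetAddress.getAllByName(uri.getHost())) {
                if (address.isLoopbackAddress() || address.isAnyLocalAddress()
                        || address.isLinkLocalAddress() || address.isSiteLocalAddress()
                        || address.isMulticastAddress()) {
                    throw new IllegalArgumentException("URL resolves to a non-public address: " + address);
                }
            }
        } catch (UnknownHostException e) {
            throw new IllegalArgumentException("Unknown host: " + uri.getHost(), e);
        }
    }
}
```

This is a first line of defense rather than a complete solution: it does not cover IPv6 unique local addresses (fc00::/7), redirects would need to be re-validated, and a host can resolve differently between this check and the actual download (DNS rebinding), so pinning the resolved address or routing downloads through an egress proxy is worth considering.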

11. FAQs

Can I use a different AI model like Anthropic’s Claude or a model from Ollama?

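Absolutely. Spring AI is designed to be portable. Replace the spring-ai-starter-model-openai dependency with the starter for your provider (for example, spring-ai-starter-model-anthropic or spring-ai-starter-model-ollama) and update your application.yaml with that model's credentials and settings. Make sure the model you choose accepts image input; with Ollama, that means a vision-capable model such as LLaVA.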

How do I handle large images?

Consider resizing images before sending them to the AI service. Most models have size limits, and smaller images reduce API costs and processing time.
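As one way to do this on the server, here is a minimal sketch that downscales an image with the JDK's built-in ImageIO and Java 2D APIs before it is wrapped in a Media object. ImageDownscaler and the maxSide parameter are hypothetical; check your model provider's documentation for its actual size limits when choosing a value.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.imageio.ImageIO;

// Hypothetical helper: downscales an image so its longest side is at most maxSide
// pixels and re-encodes it as JPEG. Images already within the limit keep their size.
public final class ImageDownscaler {

    private ImageDownscaler() {}

    public static byte[] downscaleToJpeg(BufferedImage source, int maxSide) throws IOException {
        int w = source.getWidth();
        int h = source.getHeight();
        double scale = Math.min(1.0, (double) maxSide / Math.max(w, h));
        int newW = Math.max(1, (int) Math.round(w * scale));
        int newH = Math.max(1, (int) Math.round(h * scale));

        // TYPE_INT_RGB drops any alpha channel, which JPEG cannot store anyway.
        BufferedImage target = new BufferedImage(newW, newH, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = target.createGraphics();
        try {
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION, RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            g.drawImage(source, 0, 0, newW, newH, null);
        } finally {
            g.dispose();
        }

        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (!ImageIO.write(target, "jpg", out)) {
            throw new IOException("No JPEG writer available.");
        }
        return out.toByteArray();
    }
}
```

The resulting bytes could be wrapped in a ByteArrayResource with an image/jpeg MIME type, just like the Base64 scenario does with decoded data.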

Can I analyze multiple images in one request?

Yes! All endpoints except the classpath one support multiple images. The AI will analyze all images together and provide a combined response.

Is this suitable for real-time applications?

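A multimodal LLM call is not instant: the sample request in section 7 took about 5 seconds (a duration of 4982 ms), and latency varies with image size, complexity, and model choice. For interactive features, consider asynchronous processing for a better user experience.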
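A minimal sketch of that idea, using only java.util.concurrent: the blocking analysis call runs on a dedicated thread pool and the caller gets a CompletableFuture with a timeout. AsyncAnalysisRunner is a hypothetical class; in a Spring MVC controller, returning the CompletableFuture directly lets the framework complete the HTTP response asynchronously.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical wrapper: runs a blocking analysis call (for example, a lambda that
// invokes the image analysis service) on a dedicated pool so request threads stay free.
public final class AsyncAnalysisRunner implements AutoCloseable {

    private final ExecutorService pool;

    public AsyncAnalysisRunner(int threads) {
        this.pool = Executors.newFixedThreadPool(threads);
    }

    public <T> CompletableFuture<T> submit(Supplier<T> blockingCall, long timeoutSeconds) {
        return CompletableFuture.supplyAsync(blockingCall, pool)
                // Completes the future with a TimeoutException if the call takes too long;
                // note that this does not interrupt the underlying task.
                .orTimeout(timeoutSeconds, TimeUnit.SECONDS);
    }

    @Override
    public void close() {
        pool.shutdown();
    }
}
```

Sizing the pool caps how many LLM calls run concurrently, which also helps with the rate limits mentioned under Things to Consider.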

What happens if I send a prompt that has nothing to do with the image?

In our implementation, the system prompt (SYSTEM_PROMPT_TEMPLATE) specifically instructs the AI to answer only questions related to the images. If the prompt is unrelated, the model is instructed to return a specific error message, which our service code detects and turns into an exception. This is a basic form of "guardrailing."
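The detection side of that guardrail can be sketched as below. The actual sentinel text lives in the project's SYSTEM_PROMPT_TEMPLATE and is not reproduced here, so "UNRELATED_PROMPT" is an assumed placeholder, and GuardrailCheck and its IllegalArgumentException are hypothetical stand-ins for the service's own code and ImageProcessingException.

```java
import java.util.Objects;

// Hypothetical sketch of the guardrail check. REFUSAL_SENTINEL is an assumed placeholder
// for whatever reply the real SYSTEM_PROMPT_TEMPLATE tells the model to return.
public final class GuardrailCheck {

    static final String REFUSAL_SENTINEL = "UNRELATED_PROMPT";

    private GuardrailCheck() {}

    public static String requireRelatedAnswer(String modelOutput) {
        if (Objects.requireNonNullElse(modelOutput, "").isBlank()) {
            throw new IllegalStateException("The model returned an empty response.");
        }
        // Compare the whole trimmed reply, ignoring case, because models sometimes add
        // whitespace or change capitalization when echoing an instructed reply.
        if (modelOutput.strip().equalsIgnoreCase(REFUSAL_SENTINEL)) {
            throw new IllegalArgumentException("The prompt is not related to the provided images.");
        }
        return modelOutput;
    }
}
```

Matching the whole reply rather than using contains() avoids false positives when a legitimate answer happens to mention the sentinel text, at the cost of missing refusals the model wraps in extra prose.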

12. Conclusion

We’ve built a full-featured image analysis API that demonstrates the power of multimodal AI integration. This service showcases how modern applications can leverage AI to understand and interpret visual content, opening doors to countless possibilities from e-commerce automation to accessibility improvements. The flexible architecture supporting multiple image input methods makes it adaptable to various use cases, while the clean separation of concerns ensures maintainability and extensibility for future enhancements.

13. Learn More

Interested in learning more?

Spring AI Observability: A Deep Dive into Chat Client Metrics and Prompt Logging
