Learn how to build smarter conversational applications using Spring AI Chat memory. Complete guide with code examples using InMemoryChatMemory.

1. Introduction

Imagine you’re building a chatbot for your company’s website. A user logs in and asks, “What are your business hours?” Your AI assistant responds with the opening times. Then, the user follows up with, “Do you offer weekend support?”

Without chat memory, your AI would have no idea what “you” refers to or that the question relates to the previous one about business hours. This disconnected experience frustrates users and limits the potential of your AI application.

This is where chat memory comes in—a crucial yet often overlooked component that makes conversations better by remembering what was said before.

In this guide, I’ll walk you through implementing chat memory in Spring AI applications, with a special focus on InMemoryChatMemory. Whether you’re building your first AI-powered chat application or looking to enhance an existing one, this guide will help you create more natural, context-aware conversational experiences.

2. What is Spring AI Chat Memory?

Chat memory refers to the ability of a conversational AI system to remember previous interactions within a conversation. Without memory, each message would be treated in isolation, forcing users to repeat information and preventing the AI from maintaining context. Spring AI Chat Memory provides several memory implementations to help your applications maintain meaningful, contextual conversations.

3. Why is Chat Memory Necessary?

Before diving into implementation details, it’s important to understand why chat memory is crucial:

- Contextual Understanding: Memory allows the AI to understand references to previous parts of the conversation.

- Natural Conversation Flow: Humans naturally reference previous statements in conversations. AI systems need memory to mimic this natural flow.

- Personalization: Remembering user preferences and information creates more personalized experiences.

- Reduced Repetition: Without memory, users would need to repeat information in every message.

- Complex Task Handling: Many tasks require multiple steps of interaction, which is impossible without memory.

- Token Efficiency: By managing what gets sent to the AI model, memory systems help optimize token usage and costs.

4. How Spring AI Implements Chat Memory

Spring AI provides a straightforward approach to chat memory through its ChatMemory interface. The ChatMemory interface is designed to manage the storage and retrieval of chat conversation history. It offers several methods for interacting with the stored messages.

The key methods in the ChatMemory interface are:

    public interface ChatMemory {

        // Adds a single message to the conversation
        default void add(String conversationId, Message message) {
            this.add(conversationId, List.of(message));
        }

        // Adds multiple messages to the conversation
        void add(String conversationId, List<Message> messages);

        // Retrieves the last N messages from the conversation
        List<Message> get(String conversationId, int lastN);

        // Clears the conversation history for a given conversation ID
        void clear(String conversationId);
    }

Explanation:

- add(): This method allows you to add messages to a conversation. You can add a single message using the default method or multiple messages with the other add() method.

- get(): This method lets you retrieve a specific number of recent messages from a conversation, making it easy to maintain context.

- clear(): Clears all the stored messages for a specific conversation, which can be useful when you no longer need to keep the history.

This interface provides a clean and efficient way to handle chat history, making it easy to manage conversations in Spring AI applications.
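To make the add/get/clear contract concrete, here is a minimal sketch of a map-backed store. It is not Spring AI's implementation: the class name SimpleConversationStore is hypothetical, and messages are plain strings instead of Message objects so the example runs with the JDK alone.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for a ChatMemory-style store (assumption: String messages).
class SimpleConversationStore {

    // One message list per conversation ID.
    private final Map<String, List<String>> conversations = new ConcurrentHashMap<>();

    // Appends messages to a conversation, creating it on first use.
    // Note: concurrent writes to the same conversation are not synchronized in this sketch.
    void add(String conversationId, List<String> messages) {
        conversations.computeIfAbsent(conversationId, id -> new ArrayList<>()).addAll(messages);
    }

    // Returns up to lastN of the most recent messages, oldest first.
    List<String> get(String conversationId, int lastN) {
        if (lastN <= 0) {
            return List.of();
        }
        List<String> all = conversations.getOrDefault(conversationId, List.of());
        int from = Math.max(0, all.size() - lastN);
        return new ArrayList<>(all.subList(from, all.size()));
    }

    // Drops every message stored for the conversation.
    void clear(String conversationId) {
        conversations.remove(conversationId);
    }
}
```

Asking for more messages than exist simply returns the whole conversation, and reading an unknown conversation ID returns an empty list rather than failing.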

5. Available Memory Implementations in Spring AI

Spring AI offers multiple memory implementations, allowing you to choose the most suitable one based on your application’s requirements. Here are the available implementations:

- InMemoryChatMemory: This implementation stores conversations in the application’s memory, making it fast and efficient for short-term storage. It is ideal for scenarios where persistent storage is not required.

- JdbcChatMemory: Persists conversations in a relational database. This option is useful for applications that need to store conversations across sessions and provide durability.

- CassandraChatMemory: Stores conversations in Cassandra, a distributed NoSQL database, with support for time-to-live (TTL) for automatic expiration of old messages. This suits scalable applications that need high availability.

- Neo4jChatMemory: Persists conversations in a Neo4j graph database. This implementation is beneficial when you want to model the conversation data in a graph structure, allowing for complex relationship queries between messages.

In this blog post, we’ll focus primarily on the InMemoryChatMemory implementation, which is commonly used for its simplicity and speed when persistence is not a major concern.

6. Setting Up Demo Project

First, let’s set up a basic Spring Boot project with the necessary dependencies and configurations.

Step 1: Add Spring AI Dependencies to pom.xml

Start by adding the necessary Spring AI dependencies in your pom.xml:

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-model-openai</artifactId>
        </dependency>
    </dependencies>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>${spring-ai.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

In this configuration:

- spring-boot-starter-web: Enables us to build a web application with REST endpoints.

- spring-ai-starter-model-openai: Provides integration with OpenAI’s API (though we’ll configure it for Google Gemini).

- spring-ai-bom: The dependencyManagement section uses Spring AI’s Bill of Materials (BOM) to ensure compatibility between Spring AI components.

Step 2: Configure Application Properties

Open your application.yml file and add the following configurations, replacing <your-gemini-api-key> with your API key:

    spring:
      application:
        name: spring-boot-ai-chat-memory
      ai:
        openai:
          api-key: "<your-gemini-api-key>"
          base-url: https://generativelanguage.googleapis.com/v1beta/openai
          chat:
            completions-path: /chat/completions
            options:
              model: gemini-2.0-flash-exp

This configuration uses Spring AI’s adaptability to connect to Google’s Gemini model, even though we’re using the OpenAI starter. The base-url and completions-path settings direct requests to Google’s API instead of OpenAI’s.

Google’s Gemini API suits proof-of-concept (POC) projects because it offers limited usage without requiring payment. For more details, check out our blog, where we cover how Gemini exposes an OpenAI-compatible API and how to configure it in a Spring AI application.

7. Implementing InMemoryChatMemory in Spring AI

Now, let’s dive into the heart of our topic – implementing chat memory. We’ll start with InMemoryChatMemory, which stores conversation history in memory (RAM).

Step 1: Configuring the Chat Client with Memory

First, we need to create a configuration class to set up our ChatClient with memory capabilities:

    import org.springframework.ai.chat.client.ChatClient;
    import org.springframework.ai.chat.client.advisor.MessageChatMemoryAdvisor;
    import org.springframework.ai.chat.memory.ChatMemory;
    import org.springframework.ai.chat.memory.InMemoryChatMemory;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Configuration
    public class ChatClientConfig {

        // Chat client whose requests all pass through the memory advisor
        @Bean
        public ChatClient chatClient(ChatClient.Builder chatClientBuilder, ChatMemory chatMemory) {
            return chatClientBuilder
                    .defaultAdvisors(new MessageChatMemoryAdvisor(chatMemory))
                    .build();
        }

        // Conversation history kept in RAM
        @Bean
        public ChatMemory chatMemory() {
            return new InMemoryChatMemory();
        }
    }

Let’s break down what’s happening here:

- We’re defining two Spring beans: chatMemory and chatClient.

- The chatMemory bean creates an instance of InMemoryChatMemory, which will store conversations in RAM.

- The chatClient bean uses a builder pattern to create a chat client with the MessageChatMemoryAdvisor.

The MessageChatMemoryAdvisor is a crucial component here. It:

- Automatically captures user messages and AI responses

- Stores them in the provided chat memory implementation

- Makes past conversations available to the AI for context in future interactions

This advisor handles all the memory management work behind the scenes, so you don’t need to manually save each message.

Step 2: Building a Simple Chat Controller

With our configuration in place, let’s create a basic REST controller to interact with our chatbot:

    import org.springframework.ai.chat.client.ChatClient;
    import org.springframework.ai.chat.memory.ChatMemory;
    import org.springframework.ai.chat.messages.Message;
    import org.springframework.web.bind.annotation.DeleteMapping;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.util.List;

    @RestController
    @RequestMapping("/api/chat")
    public class SimpleInMemoryChatController {

        private final ChatClient chatClient;
        private final ChatMemory chatMemory;

        public SimpleInMemoryChatController(ChatClient chatClient, ChatMemory chatMemory) {
            this.chatClient = chatClient;
            this.chatMemory = chatMemory;
        }

        // Endpoint to send messages and get responses
        @PostMapping
        public String chat(@RequestBody String request) {
            return chatClient.prompt()
                    .user(request)
                    .call()
                    .content();
        }

        // Endpoint to view conversation history
        @GetMapping("/history")
        public List<Message> getHistory() {
            return chatMemory.get("default", 100);
        }

        // Endpoint to clear conversation history
        @DeleteMapping("/history")
        public String clearHistory() {
            chatMemory.clear("default");
            return "Conversation history cleared";
        }
    }

This controller provides three endpoints:

- POST /api/chat – To send a message and get a response

- GET /api/chat/history – To view the conversation history

- DELETE /api/chat/history – To clear the conversation history

Notice the use of the “default” conversation ID when accessing memory. When a request does not specify a conversation ID, the MessageChatMemoryAdvisor stores messages under this default identifier, so the history endpoints must read and clear the same ID.

🧩 Chat Memory in Action: Key Endpoints Explained

a. Send Message – POST /api/chat

How It Works: When a user sends a message via the /api/chat endpoint:

- The chatClient.prompt().user(request).call() method is triggered.

- It takes the user’s input message from the request.

- The MessageChatMemoryAdvisor automatically retrieves any previous messages stored in memory for the “default” conversation.

- It sends both the new user message and the previous context to the configured AI model (e.g., Google Gemini).

- The AI processes the full conversation context and returns a response.

- Both the user’s message and the AI’s response are stored in memory to maintain conversation flow for future interactions.

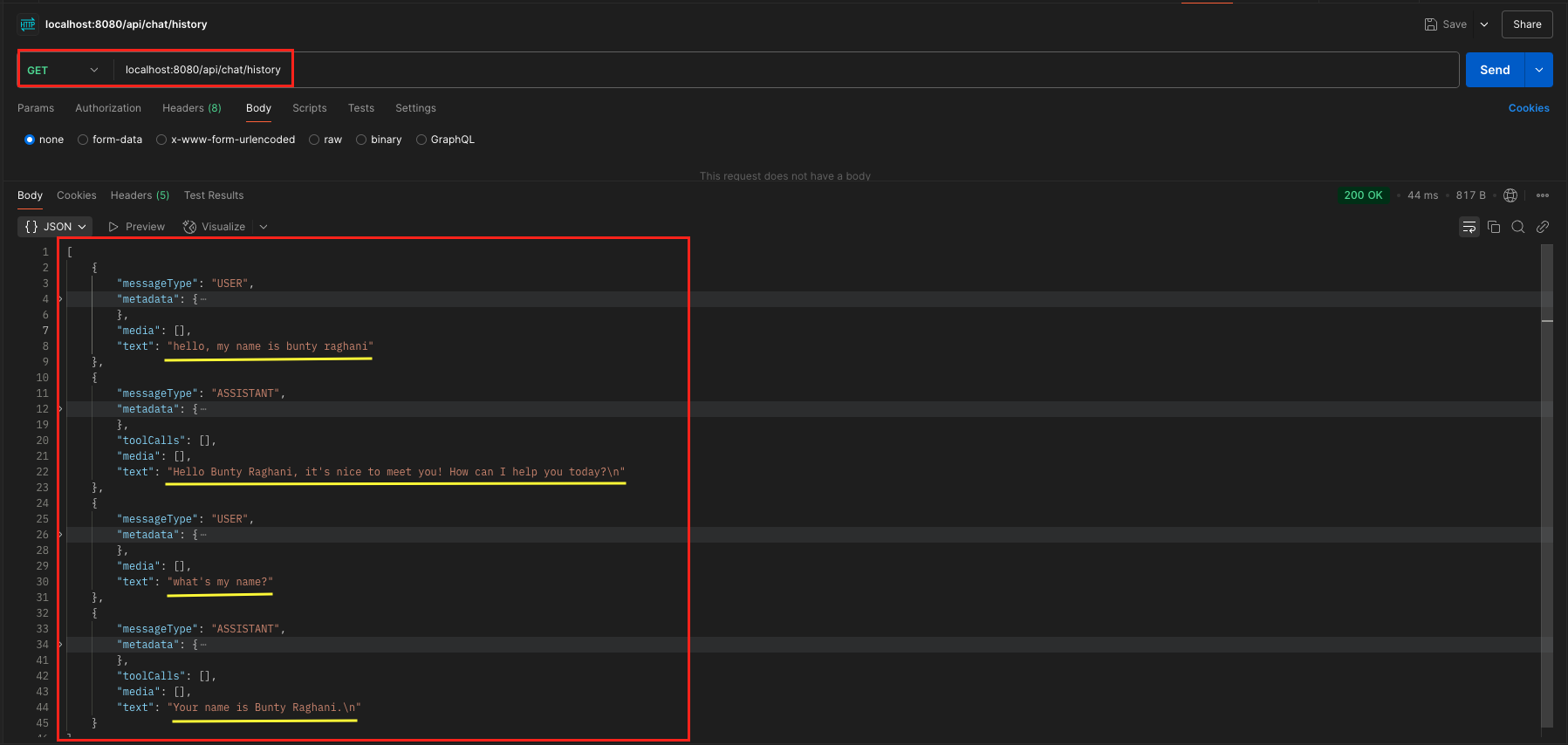
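The send-message flow above can be sketched in plain Java. This is only an illustration of the retrieve, call, store cycle and not the MessageChatMemoryAdvisor itself: the class MemoryAwareChat is hypothetical, the model is a stand-in Function, and messages are plain strings with a role prefix.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical illustration of the memory-advisor cycle (not Spring AI code).
class MemoryAwareChat {

    private final List<String> history = new ArrayList<>();
    // Stand-in for the LLM call: receives the full prompt, returns a reply.
    private final Function<List<String>, String> model;

    MemoryAwareChat(Function<List<String>, String> model) {
        this.model = model;
    }

    String chat(String userMessage) {
        // 1. Combine the stored history with the new user message.
        List<String> prompt = new ArrayList<>(history);
        prompt.add("user: " + userMessage);

        // 2. Send the full context to the model.
        String reply = model.apply(prompt);

        // 3. Store both sides of the exchange for the next turn.
        history.add("user: " + userMessage);
        history.add("assistant: " + reply);
        return reply;
    }

    // Read-only snapshot of what has been remembered so far.
    List<String> history() {
        return List.copyOf(history);
    }
}
```

With a fake model such as `prompt -> "seen " + prompt.size()`, the first call sees one message and the second sees three, which is the growth in context the advisor provides automatically.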

b. View Chat History – GET /api/chat/history

How It Works: When a user calls the /api/chat/history endpoint with the GET method:

- The chatMemory.get("default", 100) method retrieves up to 100 recent messages from the in-memory store.

- These messages include both user inputs and AI responses from the “default” conversation.

- This helps you understand the full conversation context currently held in memory, which is useful for debugging or displaying chat history in the UI.

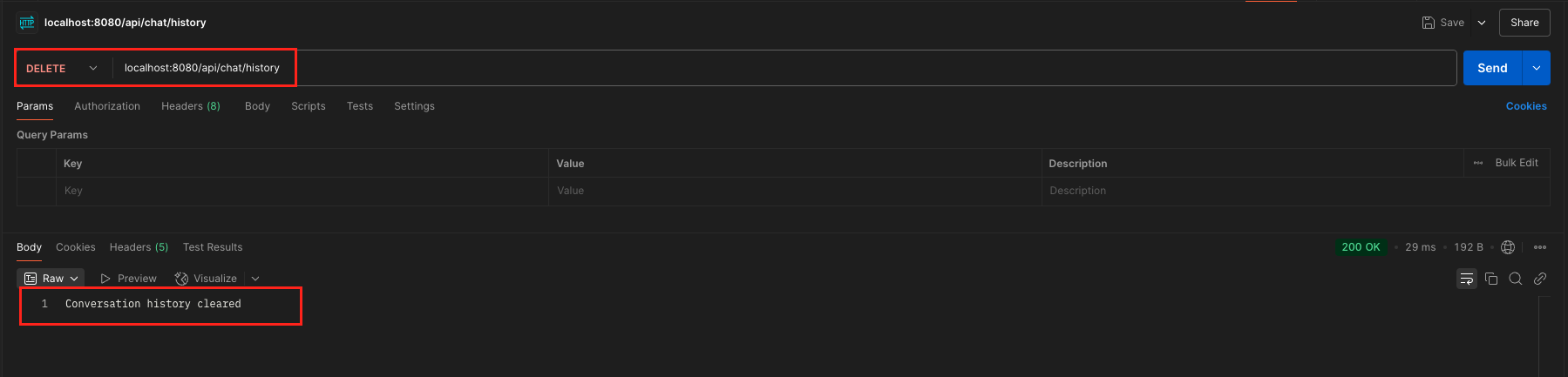

c. Clear Chat History – DELETE /api/chat/history

How It Works: When the /api/chat/history endpoint is called with the DELETE method:

- The chatMemory.clear("default") method wipes all stored messages for the “default” conversation.

- This removes any context previously stored—essentially resetting the chat memory.

- It’s useful when starting a new conversation or when you want the AI to forget the previous context.

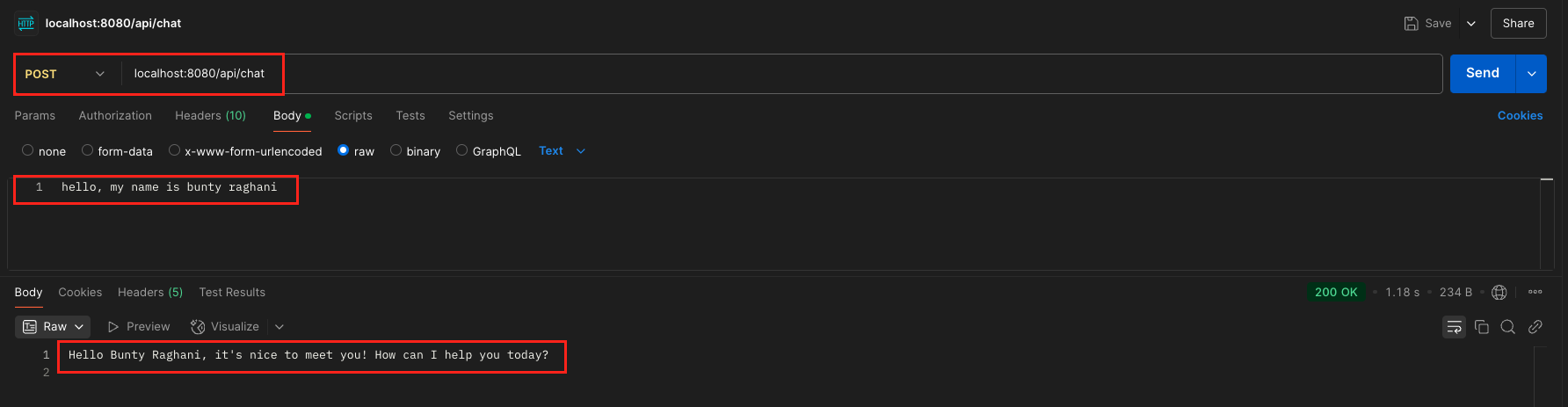

Step 3: Verify the output

Now that we’ve set up our endpoints, let’s test how chat memory works in action.

- Send an initial message

- 💬

POST /api/chatwith:"hello, my name is bunty raghani" - 🤖 LLM responds with a greeting and remembers the name.

- 💬

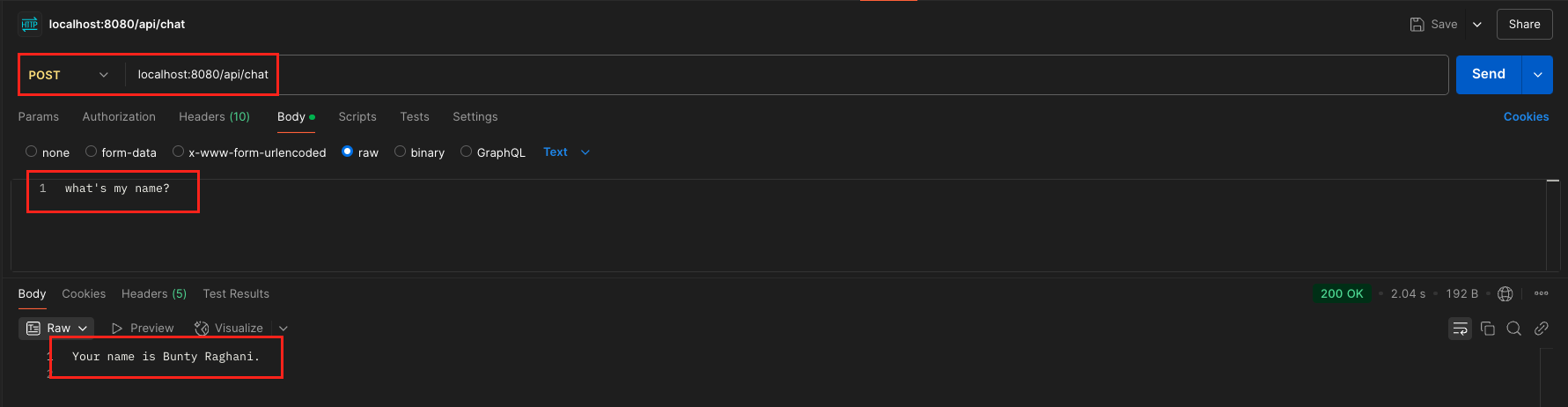

- Ask a follow-up

- 💬

POST /api/chatwith:"what's my name?" - 🤖 LLM replies:

"Your name is Bunty Raghani."(thanks to memory)

- 💬

- Check memory

- 💬

GET /api/chat/history - 🤖 Returns full conversation so far.

- 💬

- Clear the memory

- 🧹

DELETE /api/chat/history - 🤖 Returns:

"Conversation history cleared"

- 🧹

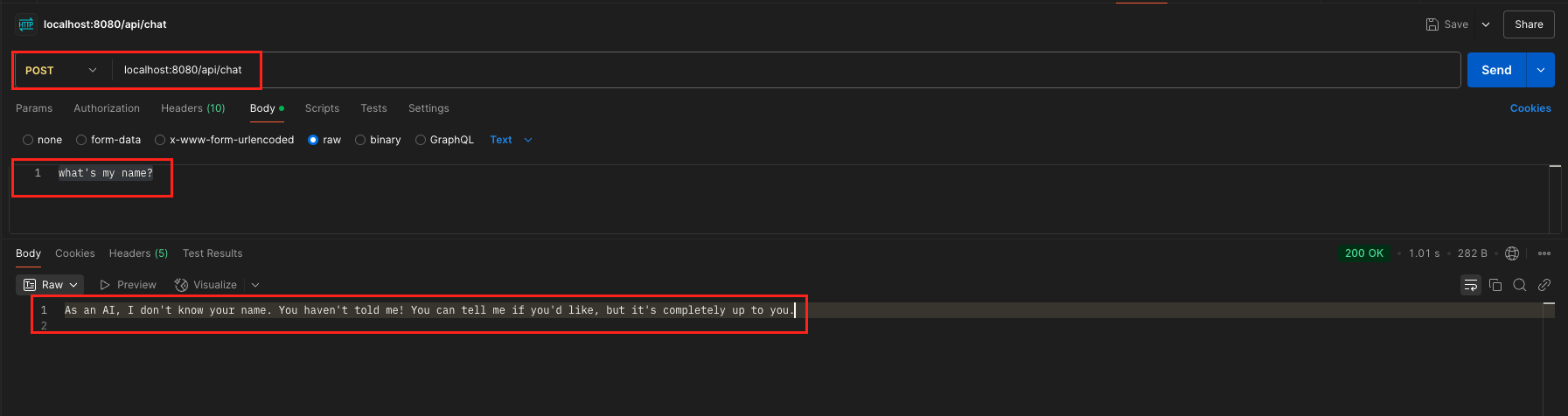

- Test again after clearing

- 💬

POST /api/chatwith:"what's my name?" - 🤖 LLM responds:

"As an AI, I don't know your name. You haven't told me! You can tell me if you'd like, but it's completely up to you."

- 💬

This confirms that the chat memory is working as intended — storing, retrieving, and resetting context effectively.

This simple implementation works well for applications with a single conversation stream. But what about applications with multiple users, each needing their own conversation history? Let’s find out in the next section.

8. Multi-User Chat Memory Implementation

When your application needs to handle multiple users, each with their own conversation history, storing all chats in a single memory won’t work. Instead, we need to isolate conversations by a unique identity like a user ID.

Here’s how to implement that:

Before we dive into the steps, just a quick note — the ChatClientConfig stays the same, just like we explained above.

Step 1: Building a Chat Controller

    import org.springframework.ai.chat.client.ChatClient;
    import org.springframework.ai.chat.memory.ChatMemory;
    import org.springframework.ai.chat.messages.Message;
    import org.springframework.web.bind.annotation.DeleteMapping;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.PathVariable;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.util.List;

    import static org.springframework.ai.chat.client.advisor.AbstractChatMemoryAdvisor.CHAT_MEMORY_CONVERSATION_ID_KEY;

    @RestController
    @RequestMapping("/api/users")
    public class MultiUserInMemoryChatController {

        private final ChatClient chatClient;
        private final ChatMemory chatMemory;

        public MultiUserInMemoryChatController(ChatClient chatClient, ChatMemory chatMemory) {
            this.chatClient = chatClient;
            this.chatMemory = chatMemory;
        }

        // Sends a message within the conversation that belongs to userId
        @PostMapping("/{userId}/chat")
        public String chat(
                @PathVariable String userId,
                @RequestBody String request) {
            return chatClient
                    .prompt()
                    .advisors(advisorSpec -> advisorSpec.param(CHAT_MEMORY_CONVERSATION_ID_KEY, userId))
                    .user(request)
                    .call().content();
        }

        // Returns up to 100 recent messages for this user only
        @GetMapping("/{userId}/history")
        public List<Message> getHistory(@PathVariable String userId) {
            return chatMemory.get(userId, 100);
        }

        // Clears this user's history without touching other users
        @DeleteMapping("/{userId}/history")
        public String clearHistory(@PathVariable String userId) {
            chatMemory.clear(userId);
            return "Conversation history cleared for user: " + userId;
        }
    }

The key difference here is how we’re using the userId as a conversation identifier:

- In the chat endpoint, we use .advisors(advisorSpec -> advisorSpec.param(CHAT_MEMORY_CONVERSATION_ID_KEY, userId)) to tell the MessageChatMemoryAdvisor which conversation ID to use. In our case, the userId path variable acts as the conversation ID.

- The history and clear endpoints now work with user-specific conversation IDs instead of the default one.

This approach allows each user to have their own isolated conversation history, creating personalized experiences for everyone.
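Conceptually, passing a per-request parameter amounts to a lookup with a fallback. The sketch below shows that idea only; the class ConversationIdResolver and the key name "conversationId" are hypothetical and do not reflect the actual value of CHAT_MEMORY_CONVERSATION_ID_KEY or Spring AI's internals.

```java
import java.util.Map;

// Hypothetical illustration of resolving a conversation ID from per-request parameters.
class ConversationIdResolver {

    // Assumed key name, chosen for this example only.
    static final String CONVERSATION_ID_KEY = "conversationId";
    static final String DEFAULT_CONVERSATION_ID = "default";

    // Uses the ID supplied with the request, or falls back to the shared default.
    static String resolve(Map<String, Object> advisorParams) {
        Object value = advisorParams.get(CONVERSATION_ID_KEY);
        if (value == null || value.toString().isBlank()) {
            return DEFAULT_CONVERSATION_ID;
        }
        return value.toString();
    }
}
```

A request carrying `Map.of("conversationId", "Alice")` resolves to Alice's conversation, while a request with no parameters lands in the shared "default" conversation, which is why the single-user controller and the multi-user controller never see each other's history.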

Step 2: Verify the Output

Let’s test how chat memory works when handling multiple users. We’ll walk through two separate conversations — one for Alice and one for Bob — and see how their chat histories are independently managed.

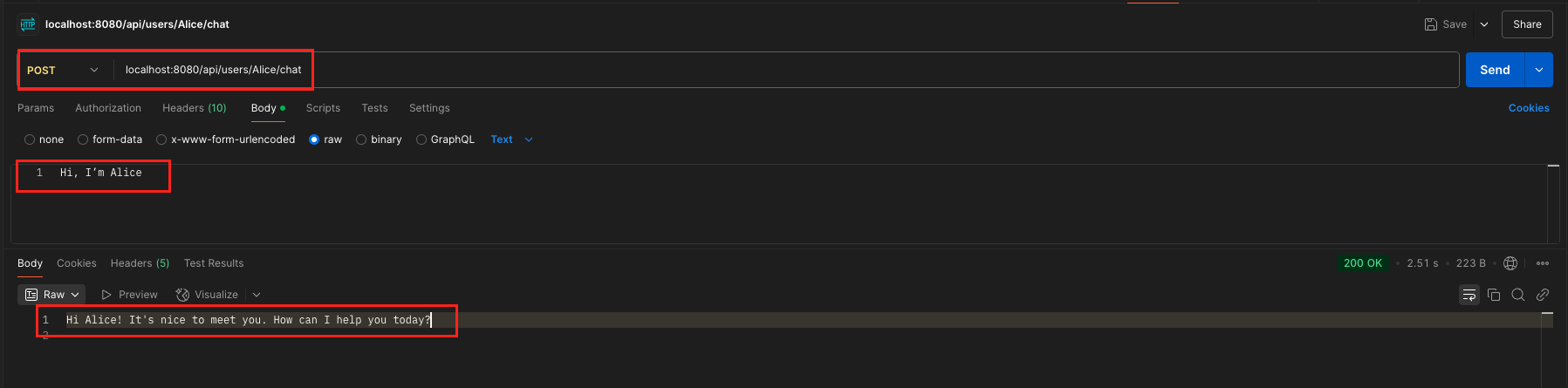

🧑💻 Alice’s Conversation Flow

- Send a message

💬 Alice says: “Hi, I’m Alice”

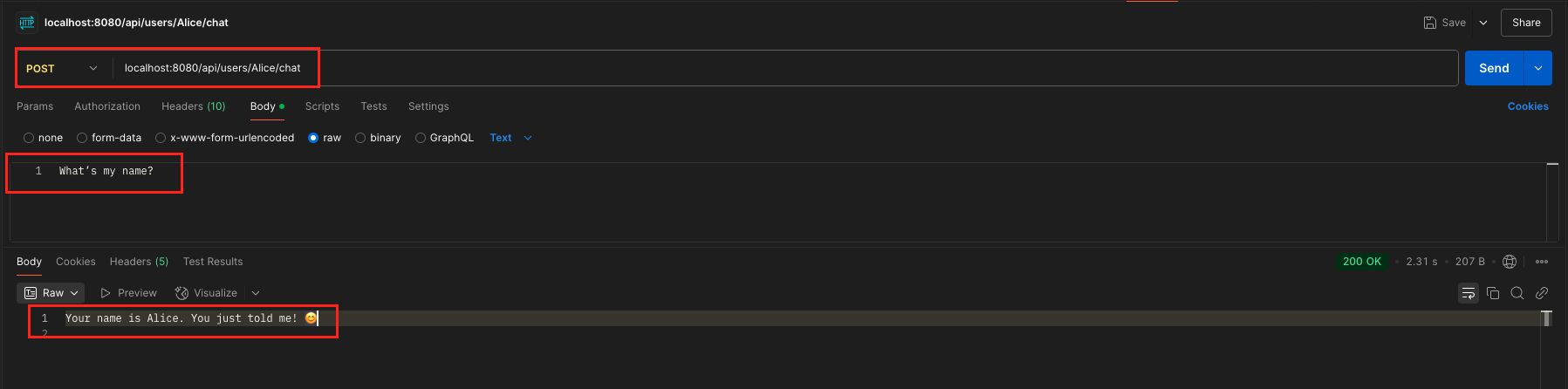

🤖 The LLM replies: “Hi Alice! It’s nice to meet you. How can I help you today?” - Ask for name

💬 Alice says: “What’s my name?”

🤖 The LLM responds using memory: “Your name is Alice. You just told me! 😊”

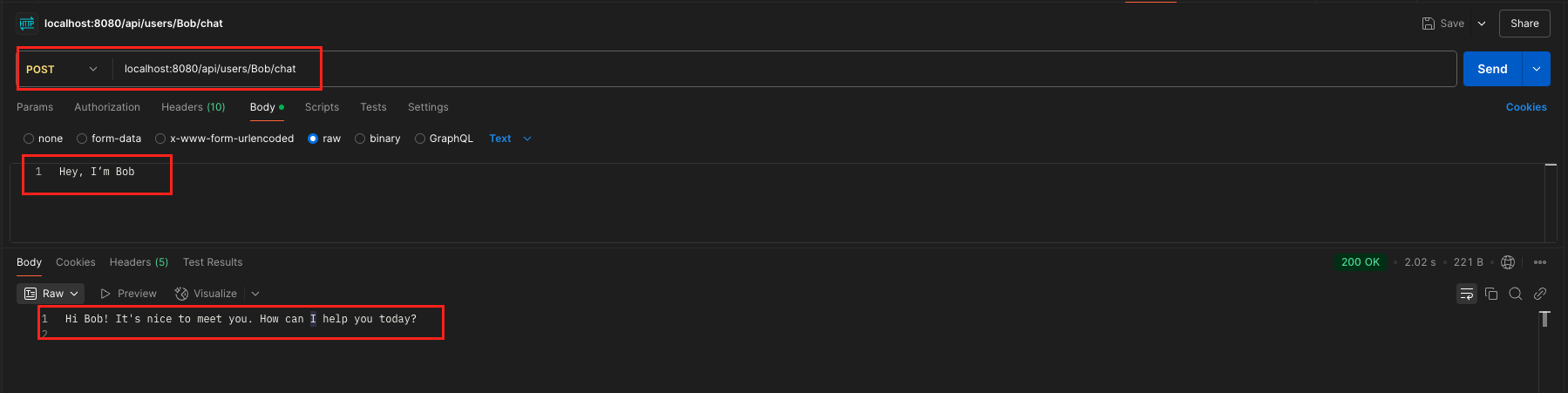

🧑💻 Bob’s Conversation Flow

- Send a message

💬 Bob says: “Hey, I’m Bob”

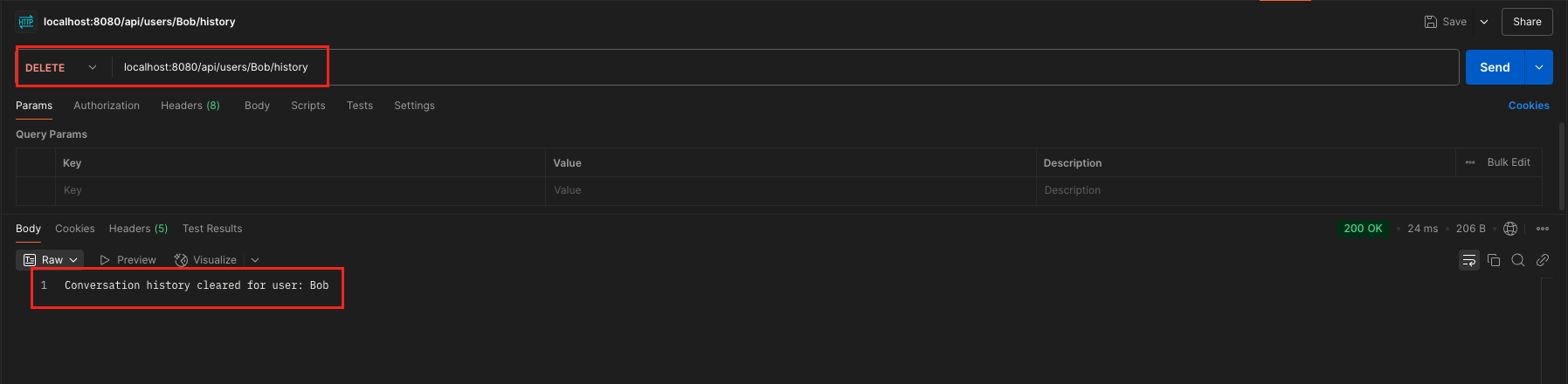

🤖 The LLM replies: “Hi Bob! It’s nice to meet you. How can I help you today?” - Clear Bob’s memory

🧹 Call DELETE /api/users/Bob/history

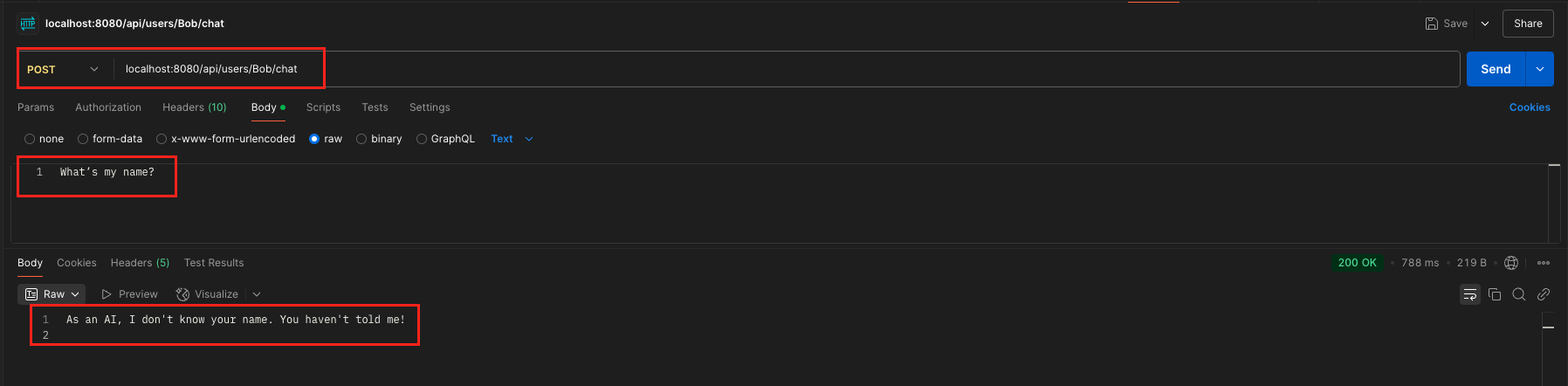

🤖 Response: “Conversation history cleared for user: Bob” - Ask for name

💬 Bob says: “What’s my name?”

🤖 Since Bob’s memory was cleared, the LLM says: “As an AI, I don’t know your name. You haven’t told me!”

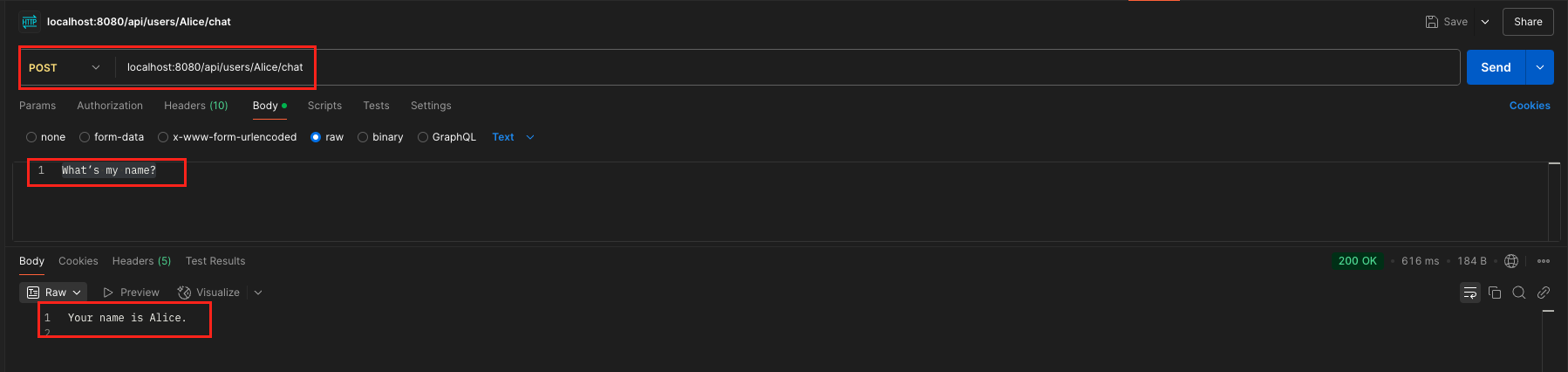

🔁 Alice’s Memory Is Still Intact

- Ask again

💬 Alice says: “What’s my name?”

🤖 The LLM responds correctly: “Your name is Alice.”

This confirms that Alice’s conversation memory remains intact and unaffected by Bob’s actions — proving that each user has their own isolated memory space.

9. Pros and Cons of InMemoryChatMemory

✅ Pros

- Easy to set up: No special configurations or dependencies required. You can start using it right away with minimal code.

- No external infrastructure needed: Unlike databases or caches, it runs entirely in the app’s memory—no need to connect to any external systems.

- Fast access and response time: Since all data is stored in memory, reading and writing chat history is extremely quick and efficient.

⚠️ Cons

- Data is lost on app restart: All stored conversation history disappears when you restart or redeploy the application.

- Not suitable for multiple instances: In distributed systems (like in cloud environments with multiple app instances), each instance has its own separate memory. That means users may lose context if they get routed to a different instance.

- Unbounded memory growth: If you don’t implement limits or cleanup strategies, memory can grow indefinitely as more users chat, potentially leading to performance issues over time.

10. Video Tutorial & Source Code

If you prefer visual learning, check out our step-by-step video tutorial demonstrating the complete Spring AI InMemoryChatMemory implementation for both single and multi-user scenarios.

📺 Watch on YouTube:

🧑💻 And if you’d like to try it yourself, you can find the complete example implementations discussed in this blog on GitHub:

👉 Spring Boot AI In-Memory Chat Memory: View on GitHub

11. Best Practices for Managing Chat Memory

As your application grows, consider these best practices for managing chat memory effectively:

- Memory Cleanup: Implement a strategy to clean up old conversations, especially for InMemoryChatMemory, to prevent unbounded memory growth.

- Message Limits: Set appropriate limits on how many messages to retrieve from history to balance context quality and performance.

- Privacy Considerations: Be transparent with users about how their conversation data is stored and used.

- Conversation Segregation: Always use unique conversation IDs for different users or conversation contexts.

- Error Handling: Implement robust error handling for memory operations, especially for external storage implementations.

- Memory Size Monitoring: Keep an eye on the size of your memory storage, especially for production applications with many users.
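As one possible way to combine message limits with cleanup, here is a minimal sketch of a store that caps each conversation and evicts idle ones. The class BoundedConversationStore and its parameters are hypothetical and not part of Spring AI; timestamps are passed in explicitly so the behavior is easy to test.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical bounded store: caps messages per conversation and evicts idle conversations.
class BoundedConversationStore {

    private final int maxMessagesPerConversation;
    private final long maxIdleMillis;
    private final Map<String, Deque<String>> messages = new HashMap<>();
    private final Map<String, Long> lastTouched = new HashMap<>();

    BoundedConversationStore(int maxMessagesPerConversation, long maxIdleMillis) {
        this.maxMessagesPerConversation = maxMessagesPerConversation;
        this.maxIdleMillis = maxIdleMillis;
    }

    // Appends a message and drops the oldest ones once the cap is exceeded.
    synchronized void add(String conversationId, String message, long nowMillis) {
        Deque<String> queue = messages.computeIfAbsent(conversationId, id -> new ArrayDeque<>());
        queue.addLast(message);
        while (queue.size() > maxMessagesPerConversation) {
            queue.removeFirst();
        }
        lastTouched.put(conversationId, nowMillis);
    }

    // Returns the retained messages, oldest first.
    synchronized List<String> get(String conversationId) {
        Deque<String> queue = messages.get(conversationId);
        return queue == null ? List.of() : List.copyOf(queue);
    }

    // Removes conversations idle for longer than maxIdleMillis; returns how many were evicted.
    synchronized int evictIdle(long nowMillis) {
        int evicted = 0;
        Iterator<Map.Entry<String, Long>> it = lastTouched.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (nowMillis - entry.getValue() > maxIdleMillis) {
                messages.remove(entry.getKey());
                it.remove();
                evicted++;
            }
        }
        return evicted;
    }
}
```

In a real application, evictIdle would typically run on a schedule, and the size of the two maps is a natural metric to monitor.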

12. Things to Consider

When implementing chat memory in your Spring AI applications, consider these important factors:

- Memory Persistence: Conversation history held in InMemoryChatMemory is lost if your application restarts. For applications requiring persistence across restarts, consider database-backed implementations.

- Scaling Considerations: In a multi-instance environment, InMemoryChatMemory won’t be shared between instances. Consider database implementations for clustered deployments.

- Context Window Limitations: AI models have token limits for their context windows. Too much history can exceed these limits, so be strategic about how much history you include.

- Security Implications: Chat histories may contain sensitive information. Ensure your storage method meets your security requirements.

- Performance Impact: Retrieving and processing large conversation histories can impact response times. Monitor and optimize as needed.
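To illustrate the context-window point, here is a rough sketch that keeps only the most recent messages fitting a token budget. The class HistoryTrimmer is hypothetical, and the word-count estimate is a deliberate simplification; real tokenizers count differently, so treat the numbers as approximate.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper that trims history to an approximate token budget.
class HistoryTrimmer {

    // Crude estimate: counts whitespace-separated words (assumption, not a real tokenizer).
    static int estimateTokens(String message) {
        String trimmed = message.trim();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }

    // Walks backwards from the newest message and keeps messages until the budget is spent.
    static List<String> trimToBudget(List<String> history, int maxTokens) {
        List<String> kept = new ArrayList<>();
        int used = 0;
        for (int i = history.size() - 1; i >= 0; i--) {
            int cost = estimateTokens(history.get(i));
            if (used + cost > maxTokens) {
                break;
            }
            kept.add(history.get(i));
            used += cost;
        }
        // Restore chronological order before sending to the model.
        Collections.reverse(kept);
        return kept;
    }
}
```

Trimming from the oldest end keeps the turns most likely to matter for the next reply. A summary of the dropped messages is a common alternative when older context still matters.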

13. FAQs

Can I customize how much history is sent to the AI model?

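Yes! When retrieving history with chatMemory.get(conversationId, lastN), the lastN parameter lets you specify the number of most recent messages to fetch, giving you control over how much of the conversation history is retrieved. Keep in mind that this call controls what your own code reads; how much history the advisor attaches to each prompt depends on the advisor’s own configuration.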

Will my chat history survive application restarts with InMemoryChatMemory?

No, InMemoryChatMemory stores data in RAM, which is cleared when the application stops. Use database-backed implementations for persistence.

Can I pre-load conversation history?

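Yes! You can manually add messages to a ChatMemory implementation. Every call needs the conversation ID the messages belong to:

    ChatMemory memory = new InMemoryChatMemory();
    // Use the same conversation ID that later requests will use
    memory.add("default", new SystemMessage("You are a helpful assistant."));
    memory.add("default", new UserMessage("My name is Sarah."));
    memory.add("default", new AssistantMessage("Hello Sarah, how can I help you today?"));

What happens when memory is cleared?

When you call memory.clear(conversationId), all messages for that conversation are removed from the memory implementation. The next message in that conversation will start fresh, while other conversations are unaffected.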

14. Conclusion

Chat memory plays a big role in making AI conversations feel natural and connected. With Spring AI and its simple InMemoryChatMemory option, you can quickly build chat features that remember what users say and respond with better context. In this blog, we looked at how to set up chat memory for both single and multiple users, talked about different memory types, and shared a few things to keep in mind while using them. Starting with in-memory storage is a great first step, and as your app grows, you can switch to a database-backed implementation when needed. The main goal is to make your AI chats smarter and more helpful, and memory is what makes that possible.

15. Learn More

Interested in learning more?

Spring AI Log Model Requests and Responses – 3 Easy Ways
